Late yesterday the European Union announced an “unprecedented” step against Russian disinformation, saying it would ban Kremlin-based media outlets, Russia Today (aka RT) and Sputnik — extending measures targeting the country following president Putin’s invasion of Ukraine.

“The state-owned Russia Today and Sputnik, as well as their subsidiaries will no longer be able to spread their lies to justify Putin’s war and to sow division in our Union,” said European Commission president Ursula von der Leyen. “So we are developing tools to ban their toxic and harmful disinformation in Europe.”

Details on the EU’s planned ban on Russian state media content are still thin on the ground.

At the time of writing, EU officials we’ve spoken to were unable to confirm whether the ban will extend to online platforms such as Google’s YouTube, where RT and Sputnik both have a number of channels. The US platform hosts thousands of videos they upload for viewing on demand and also enables them to reach viewers via livestreaming.

However in the past few hours EU officials have been cranking up the public pressure on mainstream tech platforms on the disinformation issue.

Today the office of internal market commissioner Thierry Breton announced that both he and the values & transparency commissioner, Vera Jourova, had spoken to the CEOs of Google and YouTube urging them to step up efforts against Russian propaganda.

On Saturday Google announced it had demonetized RT and other Russian-backed channels, meaning they can no longer receive ad revenue via its platforms or ad networks. But in a video call with the two tech CEOs Breton is reported to have said they need to go further, per Reuters.

“Freedom of expression does not cover war propaganda. For too long, content from Russia Today and other Russian state media has been amplified by algorithms and proposed as ‘recommended content’ to people who had never requested it,” the commissioner said in a statement after the call.

“War propaganda should never be recommended content — what is more, it should have no place on online platforms at all. I count on the tech industry to take urgent and effective measures to counter disinformation.”

The EU’s high representative for foreign affairs, Josep Borrell, was asked for details of the ban by the BBC this morning and also declined to specify if it will apply online.

“We’ll do whatever we can to prevent them to disseminate [toxic content to] a European audience,” he told BBC Radio 4’s Today program when questioned on how workable a ban would be, adding: “We have to try to cut them.”

He also aggressively rebutted a line of questions querying the consistency of the EU’s move vis-a-vis Western liberal democratic principles which center free speech — saying the two channels do not distribute free information but rather pump out “massive disinformation” and create an “atmosphere of hate” that he asserted is “against the freedom of thinking” and is “toxifying minds”.

“If you start telling lies all the time, if you create an atmosphere of hate this has to be forbidden,” Borrell added.

The EU’s previous proposals for dealing with online disinformation have largely focused on getting voluntary buy-in from the tech sphere, through a Code of Practice on disinformation. However it has been pressing for tougher action in recent years, especially around COVID-19 disinformation which presents a clear public safety danger.

But an out-and-out ban on media entities — even those clearly linked to the Kremlin — is a major departure from the usual Commission script.

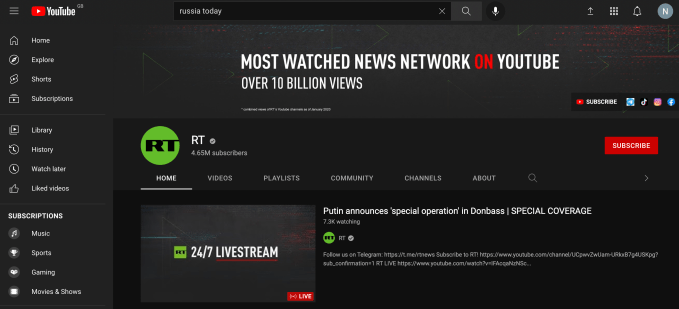

Of the two sanctioned channels, Russia Today appears to garner the most eyeballs on YouTube — where its main channel has some 4.65M subscribers, although content produced by the state-backed media outlet can be found far more widely across the platform.

The channel’s marketing even claims it is “the most watched news network on YouTube” — with a touted 10BN+ views — which looks more than a little awkward for Google in the context of Putin’s land war in Europe.

Screengrab: Natasha Lomas/TechCrunch

We reached out to Google to ask if it intends to take further action against the Kremlin-backed media channels in light of the EU’s decision to ban them.

At the time of writing the company had not responded but we’ll update this report if it does.

While the Kremlin deploys the very thinly veiled camouflage of (claimed) media operations to launder its propaganda as professional news commentary, its infowars tactics are of course far more sprawling online — where multiple user generated platforms provide Putin with (essentially) limitless opportunities to pass his influence ops off as genuine public opinion by making his propaganda look like organic user generated content.

That means, even if pressure from the EU forces mainstream platforms like YouTube to ban RT and Sputnik, it can’t hope to stop the Kremlin’s propaganda machine. It will just drive Russia to produce more propaganda that is less directly attributable, via sock puppet/fake accounts and the like.

Notably, among the new sanctions the EU announced at the weekend, it added the notorious Russian troll factory, the Internet Research Agency, to the expanded list, along with its oligarch funder.

Although the agency isn’t likely to be the only troll farm operating at Russia’s bidding, even if it is the most well known. Reports have long suggested Russia’s web brigade has used outsourcing tactics to try to better cover its manipulative tracks, for example.

In a small sign of some of the less immediately visible Kremlin-backed propaganda activity dialling up around the Ukraine war, Facebook’s parent company Meta put out an update today. It said the teams it has monitoring disinformation (aka “coordinated inauthentic behavior”, as Facebook terms it) have been on “high alert” since the invasion, and have taken down a network of circa 40 accounts, Pages and Groups on Facebook and Instagram, run out of Russia, which had been targeting people in Ukraine.

In a neat illustration of the malicious duality that can be applied to even disinformation reporting tools, Meta said the Russian network it identified had been reporting Ukrainians for violating its policies on coordinated inauthentic behavior — including by posing as independent news outlets.

“They ran websites posing as independent news entities and created fake personas across social media platforms including Facebook, Instagram, Twitter, YouTube, Telegram and also Russian Odnoklassniki and VK,” write Nathaniel Gleicher, Meta’s head of security policy, and David Agranovich, a director, threat disruption.

“They were operated from Russia and Ukraine and targeted people in Ukraine across multiple social media platforms and through their own websites. We took down this operation, blocked their domains from being shared on our platform, and shared information with other tech platforms, researchers and governments. When we disrupted this network on our platform, it had fewer than 4,000 Facebook accounts following one or more of its Pages and fewer than 500 accounts following one or more of its Instagram accounts.”

Meta also said some of the accounts it found using fictitious personas had used profile pictures which it suggests were generated using artificial intelligence techniques like generative adversarial networks (GANs).

“They claimed to be based in Kyiv and posed as news editors, a former aviation engineer, and an author of a scientific publication on hydrography — the science of mapping water. This operation ran a handful of websites masquerading as independent news outlets, publishing claims about the West betraying Ukraine and Ukraine being a failed state,” it adds.

“Our investigation is ongoing, and so far we’ve found links between this network and another operation we removed in April 2020, which we then connected to individuals in Russia, the Donbass region in Ukraine and two media organizations in Crimea — NewsFront and SouthFront, now sanctioned by the US government.”

Meta’s security update also warns that it’s seen increased targeting of people in Ukraine — including Ukrainian military and public figures — by Ghostwriter, a threat actor it notes has been tracked for some time by the security community.

“We detected attempts to target people on Facebook to post YouTube videos portraying Ukrainian troops as weak and surrendering to Russia, including one video claiming to show Ukrainian soldiers coming out of a forest while flying a white flag of surrender,” it notes, adding: “We’ve taken steps to secure accounts that we believe were targeted by this threat actor and, when we can, to alert the users that they had been targeted. We also blocked phishing domains these hackers used to try to trick people in Ukraine into compromising their online accounts.”

Twitter also confirmed that it has taken some action against suspected Russian disinformation on its platform since the invasion when we asked.

A spokesperson for the social network told us:

“On Feb. 27, we permanently suspended more than a dozen accounts and blocked the sharing of several links in violation of our platform manipulation and spam policy. Our investigation is ongoing; however, our initial findings indicate that the accounts and links originated in Russia and were attempting to disrupt the public conversation around the ongoing conflict in Ukraine. As is standard, further details will be shared through our information operations archive once complete.”

Twitter said it will be continuing to monitor its platform for “emerging narratives” which violate platform rules as the situation in Ukraine develops — such as rules on synthetic and manipulated media and its platform manipulation policy — adding that it will take enforcement action when it identifies content and accounts that violate its policies.

The platform does already label state-affiliated accounts belonging to the Russian Federation.

Meta, meanwhile, faced restrictions on its service inside Russia Friday — after the state internet regulator appears to have retaliated over fact-checking labels Facebook had placed on four Kremlin-linked media firms.

On Saturday, Twitter also said access to its service had been restricted in Russia following street protests against the war.

Both Meta and Twitter are urging users to beef up their account security in light of the threat posed by Russian cyberops, suggesting people be cautious about accepting Facebook friend requests from people they don’t know, for example, and implementing 2FA on their accounts to add an additional security layer.

We also reached out to TikTok to ask if it’s taken any measures against Russian propaganda but at the time of writing it had not responded.

The Russian military has been a keen user of TikTok in recent years as Putin’s propaganda tacticians leveraged the viral video clip sharing platform to crank up a visual display of power which looked intended to psych out and sap the will of Ukraine to resist Russian aggression.

Albeit, if anti-Ukraine ‘psyops’ was the primary goal of Russian military TikTokking the tactic appears to have entirely failed to hit the mark.
