Today we’re publishing our Community Standards Enforcement Report for the first quarter of 2022. It shows how we enforced our policies from January through March of 2022 across 14 policy areas on Facebook and 12 on Instagram. We’re also sharing several other reports in our Transparency Center including:

- The Widely Viewed Content Report for the first quarter of 2022

- The Oversight Board’s Quarterly Report for the first quarter of 2022, where we provide an update on our responses to 55 recommendations issued by the board. This report shows that in response to board recommendations, we have initiated two new in-depth policy reviews, which will likely result in meetings of the Policy Forum to consider specific changes. It also details new research projects we’ve undertaken, both qualitative and quantitative, to better understand how we can incorporate people’s voices into our appeals and review processes.

- Several reports covering the second half of 2021 on topics including government requests for user data, how we're protecting people's intellectual property, how often our services were disrupted, and when we had to restrict content based on local laws in a particular country.

- And a new Transparency Center reports page that makes country-specific regulatory reports more accessible

We're also releasing the findings from EY's independent assessment of our enforcement reporting. Last year, we asked EY to verify the metrics in our Community Standards Enforcement Report because we don't believe we should grade our own homework. EY's assessment concluded that the metrics were calculated based on the specified criteria and are fairly stated, in all material respects. As this report grows, we will keep working on ways to make sure it is accurately presented and independently verified.

Highlights From the Report

The prevalence of violating content on Facebook and Instagram remained relatively consistent but decreased in some of our policy areas from Q4 2021 to Q1 2022.

On Facebook in Q1 we took action on:

- 1.8 billion pieces of spam content, an increase from 1.2 billion in Q4 2021, due to actions against a small number of users making a large volume of violating posts.

- 21.7 million pieces of violence and incitement content, an increase from 12.4 million in Q4 2021, due to the improvement and expansion of our proactive detection technology.

On Instagram in Q1 we took action on:

- 1.8 million pieces of drug content, an increase from 1.2 million in Q4 2021, due to updates made to our proactive detection technologies.

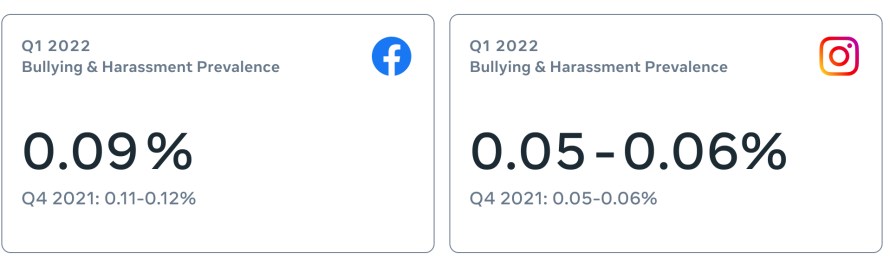

We also saw the proactive detection rate for bullying and harassment content rise from 58.8% in Q4 2021 to 67% in Q1 2022, thanks to the improvement and expansion of our proactive detection technology. The prevalence of bullying and harassment on Facebook continued to decrease slightly, from 0.11-0.12% in Q4 2021 to 0.09% in Q1 2022.

Refining Our Policies and Enforcement

Over the years we've invested in building technology to improve how we detect violating content. Along with this progress, we've known that we will make mistakes, so it has been equally important to invest in refining our policies, our enforcement and the tools we give to users.

For example, we've improved our transparency over the years to better inform people about why we took down a post, and we've improved their ability to appeal and ask us to take another look. We include metrics about appeals in this report. Recently, we've begun to evaluate the effectiveness of our penalty system more deeply, for example by testing the impact of giving people additional warnings before triggering more severe penalties for violating our policies.

Last year we saw how policy refinements can ensure that we aren't over-enforcing beyond what we intend. Updates we made to our bullying and harassment policy better accounted for language that can be easily misunderstood without context. Enforcement systems also play a role: we recently began testing new AI technology that identifies and prevents potential over-enforcement by better learning from content that's appealed and subsequently restored. For example, the same word that is an offensive slur in the U.S. is also a common British term for a cigarette, which would not violate our policies. We're also testing a number of improvements to our proactive enforcement. These include enabling some Group admins to better shape group culture and take context into account around what is and isn't allowed in their space, and better reflecting the context in which people write comments between friends, where good-natured banter could sometimes be mistaken for violating content.

Looking Ahead

For a long time, our work has focused on measuring and reducing the prevalence of violating content on our services. But we’ve worked just as hard to improve the accuracy of our enforcement decisions. While prevalence helps us measure what we miss, we’ve also been working on developing robust measurements around mistakes, which will help us better understand where we act on content incorrectly. We believe sharing metrics around both prevalence and mistakes will provide a more complete picture of our overall enforcement system and help us improve, so we are committed to providing this in the future.

Finally, as new regulations continue to roll out around the globe, we are focused on the obligations they create for us. So we are adding and refining processes and oversight across many areas of our work. This will enable us to make continued progress on social issues while also meeting our regulatory obligations more effectively.

The post Community Standards Enforcement Report, First Quarter 2022 appeared first on Meta.
