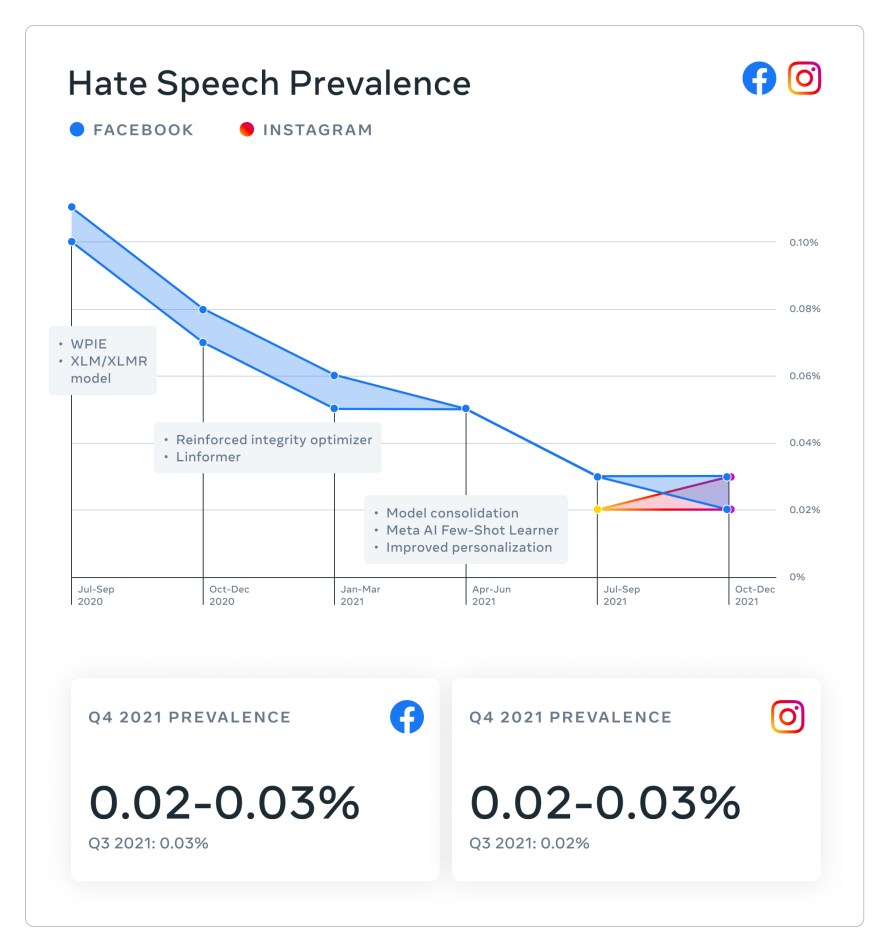

- Prevalence of harmful content on Facebook and Instagram remained relatively consistent — and decreased in some of our policy areas — from Q3 to Q4, partly due to our improved and expanded proactive detection technologies.

Today we’re publishing our Community Standards Enforcement Report for the fourth quarter of 2021, which provides metrics on how we enforced our policies from October 2021 through December 2021 across 14 policy areas on Facebook and 12 on Instagram.

We’re also sharing:

- The Widely Viewed Content Report for the fourth quarter of 2021

- The Oversight Board Quarterly Report covering the fourth quarter of 2021

- A post on our Design Blog about our integrity design efforts to prevent misuse of our technologies and promote effective and fair enforcement

All of these reports are available in the Transparency Center.

Highlights From the Report

Prevalence of harmful content on Facebook and Instagram remained relatively consistent — and decreased in some of our policy areas — from Q3 to Q4, meaning the vast majority of content that users generally encounter does not violate our policies.

We continued to see steady numbers on content we took action on across many areas.

On Facebook in Q4 we took action on:

- 4 million pieces of drug content, an increase from 2.7 million in Q3 2021, due to improvements made to our proactive detection technologies.

- 1.5 million pieces of firearm-related content, an increase from 1.1 million in Q3, due to improved and expanded proactive detection technologies.

- 1.2 billion pieces of spam content, an increase from 777 million in Q3, due to a large amount of content removed in December.

On Instagram in Q4 we took action on:

- 905K pieces of content related to terrorism, an increase from 685K pieces of content in Q3 due to improvements made to our proactive detection technologies.

- 195K pieces of firearm-related content, an increase from 154K in Q3, due to improved and expanded proactive detection technologies.
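To put the quarter-over-quarter increases listed above in relative terms, here is a minimal sketch that computes the percent change from Q3 to Q4 for each figure. The function and variable names (`pct_change`, `ACTIONED`, `summarize`) are illustrative and not part of the report, and because the published figures are rounded, the resulting percentages are approximate.

```python
# Rough percent-change calculation for the actioned-content figures quoted above.
# All names here are hypothetical; the inputs are the rounded numbers from the post.

def pct_change(previous: float, current: float) -> float:
    """Return the relative change from `previous` to `current`, in percent."""
    return (current - previous) / previous * 100.0


# (platform, policy area) -> (Q3 2021 count, Q4 2021 count), as stated in the post.
ACTIONED = {
    ("Facebook", "drugs"): (2.7e6, 4.0e6),
    ("Facebook", "firearms"): (1.1e6, 1.5e6),
    ("Facebook", "spam"): (777e6, 1.2e9),
    ("Instagram", "terrorism"): (685e3, 905e3),
    ("Instagram", "firearms"): (154e3, 195e3),
}


def summarize(figures: dict) -> dict:
    """Map each (platform, area) pair to its Q3-to-Q4 change, rounded to 0.1%."""
    return {key: round(pct_change(q3, q4), 1) for key, (q3, q4) in figures.items()}


if __name__ == "__main__":
    for (platform, area), change in summarize(ACTIONED).items():
        print(f"{platform:<9} {area:<10} {change:+.1f}%")
```

On these rounded inputs, the increases range from roughly a quarter (firearm-related content on Instagram) to roughly half (drug and spam content on Facebook).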

In the Q3 report, we began reporting prevalence metrics on bullying and harassment for the first time. In Q4, bullying and harassment prevalence on Instagram remained relatively consistent with the previous quarter at 0.05-0.06%. On Facebook, prevalence of bullying and harassment was 0.11-0.12% in Q4, down from 0.14-0.16% in Q3. The reduction in prevalence was in part due to policy updates accounting for language that, without additional context, could be considered offensive but in reality is used in a familiar or joking way between friends.

Our Steady Improvement in Reducing Harmful Content

Our steady improvement can be attributed to a holistic approach including our policies, the technology that helps us enforce them, the operational piece of global content moderation and a product design process that focuses on safety and integrity.

With advancements in AI technologies, like our Few Shot Learner and shift to generalized AI, we’ve been able to correctly take action on harmful content more quickly.

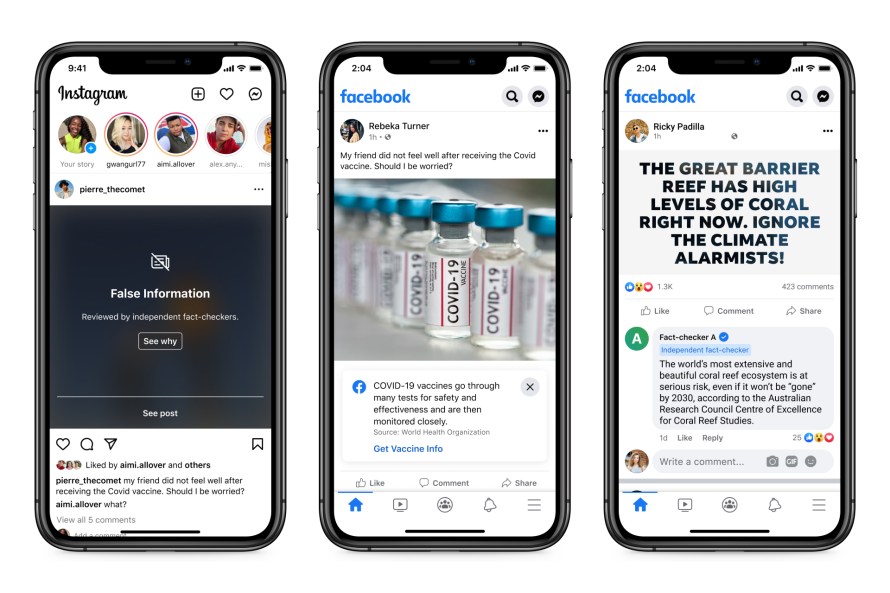

Our product design teams play an important role in our effort to reduce harmful content. By carefully designing our social media products, we can promote greater safety while providing people with context, control and the room to share their voice. For example, when applied, informative overlays and labels provide more context and limit exposure to misinformation or graphic content. Read more about our integrity design process.

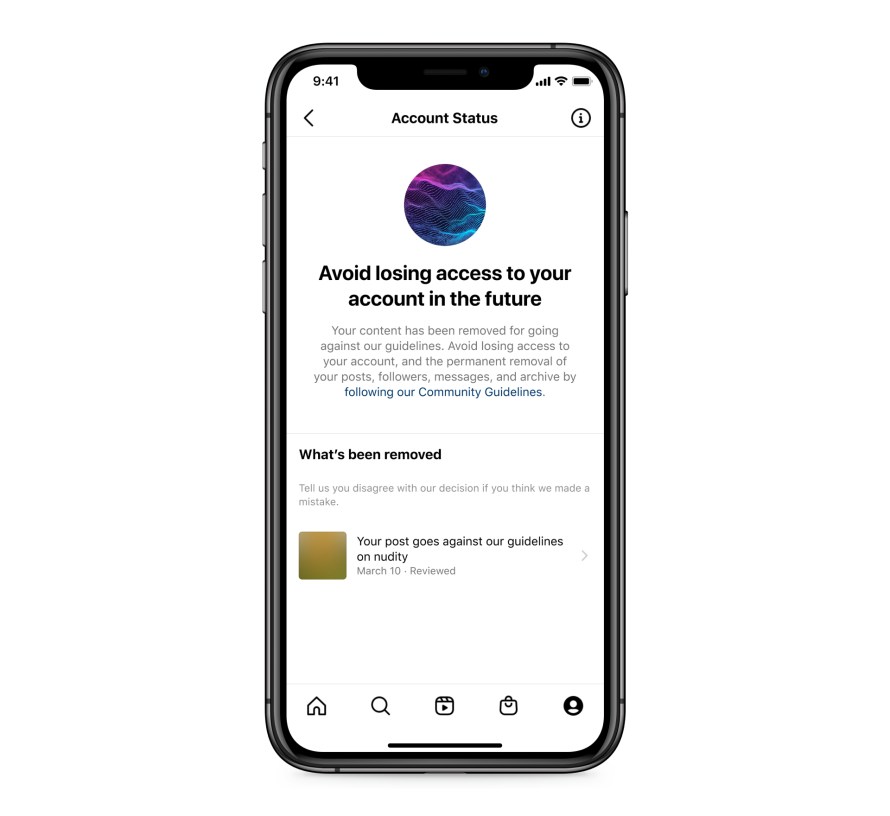

Last fall, we launched Account Status on Instagram, which helps people understand why content has been removed and what policies it violates. We know this kind of transparency is important, because it helps people better understand our policies, and it also helps our systems by giving us indications when we’ve gotten things wrong. Following this launch, we saw an increase of restored content on Instagram across several policy areas, including violence and incitement, hate speech, bullying and harassment and adult nudity and sexuality.

We’re always trying to identify policies we may be under- or over-enforcing so that we can improve. For example, in 2020 during Breast Cancer Awareness Month, there was an influx of breast cancer-related content on Instagram, including images containing adult nudity that were medical in nature. While these images don’t violate our policies, our systems incorrectly took action on many of them, which led to content being taken down from breast cancer support accounts and individual cancer survivors. We heard from our community that we needed to improve how we enforce our policies on this type of content. The Oversight Board also heard a case related to breast cancer symptoms and nudity and asked us to improve our enforcement accuracy.

Since then, we’ve been working to improve the accuracy of enforcement on health content, including content related to breast cancer and surgeries. We defined and clarified our exception for medical nudity by training our AI systems to better recognize exposed chests with mastectomy scars and by escalating content flagged as medical nudity for human review to further improve accuracy. We also send content with frequently used keywords for human review. Finally, we trained our systems to better recognize benign and non-violating images and avoid overenforcement in the future. As a result, we saw significantly less overenforcement during last year’s Breast Cancer Awareness Month, which empowered our community to share content openly.

Integrity and Transparency Looking Forward

We’re constantly looking for new ways to improve our transparency and the integrity of our platforms. For many years, we’ve published biannual transparency reports, which include the volume of content restrictions we make when content is reported as violating local law but doesn’t go against our Community Standards. We’ve now agreed to contribute to Lumen, an independent research project hosted by Harvard’s Berkman Klein Center for Internet & Society, which studies cease-and-desist letters from governments and private actors concerning online content. We’re joining Project Lumen because it allows us to hold governments, courts, regulators and ourselves accountable for content that is requested to be removed under local law. We hope to scale up our participation in Lumen over time.

We’ll continue building on our progress to enforce our policies in 2022, as we’re always working to improve, be transparent and share meaningful data.

The post Community Standards Enforcement Report, Fourth Quarter 2021 appeared first on Meta.
