Today, we’re sharing highlights of our integrity work for the second quarter of 2022, which can all be found in the Transparency Center. This update includes:

- The Community Standards Enforcement Report (CSER) for the second quarter of 2022;

- The Widely Viewed Content Report (WVCR) for the second quarter of 2022;

- The Oversight Board Quarterly Update for the second quarter of 2022;

- Updates on our work to protect safety and expression; and

- New data on our third-party fact checker program.

Earlier this month we also released our Quarterly Adversarial Threat Report, which provides an in-depth, qualitative view into the different types of adversarial threats we tackle globally as well as our approach to the upcoming elections in Brazil and the United States.

Community Standards Enforcement Report Highlights

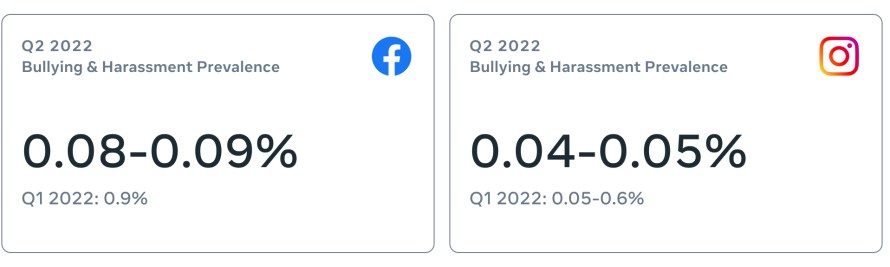

We continue to make steady progress in a number of areas, including bullying and harassment. As we continue to improve our AI technology, the proactive detection rate for bullying and harassment content increased on Facebook from 67% in Q1 2022 to 76.8% in Q2 2022, and on Instagram from 83.8% to 87.4% over the same period.

On Facebook in Q2:

- Bullying and Harassment: Prevalence was 0.08-0.09%, down from 0.09% in Q1. We took action on 8.2 million pieces of it in Q2, down from 9.5 million in Q1.

- Hate Speech: Prevalence remained at 0.02%, or two views per 10,000. We took action on 13.5 million pieces of it in Q2, down from 15.1 million in Q1.

- Violence and Incitement: Prevalence remained at 0.03%, or three views per 10,000. We took action on 19.3 million pieces of it in Q2, down from 21.7 million in Q1.

On Instagram in Q2:

- Bullying and Harassment: Prevalence was 0.04-0.05%, down from 0.05-0.06% in Q1. We took action on 6.1 million pieces of it in Q2, down from 7 million in Q1.

- Hate Speech: Prevalence was 0.01-0.02%, or one to two views per 10,000, down from 0.02% in Q1. We took action on 3.8 million pieces of it in Q2, up from 3.4 million in Q1.

- Violence and Incitement: Prevalence remained at 0.01-0.02%, or one to two views per 10,000. We took action on 3.7 million pieces of it in Q2, up from 2.7 million in Q1.
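The figures above state prevalence both as a percentage of views and as views per 10,000, and compare actioned content quarter over quarter. The minimal Python sketch below illustrates only that arithmetic, using the Facebook numbers quoted in this report. The function names `views_per_10k` and `pct_change` are hypothetical, and the sketch does not reproduce how prevalence itself is measured or estimated.

```python
"""Illustrative arithmetic behind the prevalence and enforcement figures.

Hypothetical helpers: they only convert and compare the published numbers;
they do not model how prevalence is actually estimated.
"""


def views_per_10k(prevalence_pct: float) -> float:
    """Convert prevalence given as a percentage of views into views per 10,000.

    1% of 10,000 views is 100 views, so the percentage is multiplied by 100.
    """
    return prevalence_pct * 100


def pct_change(previous: float, current: float) -> float:
    """Return the quarter-over-quarter change in percent (negative = decrease)."""
    if previous == 0:
        raise ValueError("previous value must be non-zero")
    return (current - previous) / previous * 100


# Facebook Q1 -> Q2 2022 figures quoted in this report
# (pieces of content actioned, in millions).
facebook_actions = {
    "Bullying and Harassment": (9.5, 8.2),
    "Hate Speech": (15.1, 13.5),
    "Violence and Incitement": (21.7, 19.3),
}

if __name__ == "__main__":
    # A hate speech prevalence of 0.02% corresponds to about two views per 10,000.
    print(f"0.02% -> {views_per_10k(0.02):.0f} views per 10,000")
    for policy, (q1, q2) in facebook_actions.items():
        print(f"{policy}: {pct_change(q1, q2):+.1f}% change in content actioned")
```

The two calculations are kept separate because prevalence is a rate of views, while actioned content is a raw count; only the count is compared as a percentage change between quarters.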

As part of our work to constantly improve the metrics we share in this report, we have updated our appeals methodology to account for all instances where content was submitted for additional review, including times when people told us they disagreed with our decision.

As new regulations continue to roll out around the globe, we are focused on the obligations they create for us and how those obligations might affect our ability to keep the prevalence of violating content on our apps low, minimize enforcement mistakes and conduct sufficient due diligence as part of our safety and security processes.

Oversight Board Highlights

Expansion of the Board’s Scope: The board will soon issue a new type of binding judgment on cases: whether or not we should apply a warning screen to some pieces of content. While the board has already been able to issue binding decisions on whether to take down or leave up pieces of content, this expansion will empower it further by giving it more input on how content appears and is distributed to people across our platforms.

Newsworthiness: As a result of a recommendation from the Oversight Board, we’re releasing data on the number of newsworthiness allowances we made over a 12-month period, and we’re committing to release these numbers on a regular basis going forward. From June 2021 to June 2022, we documented 68 newsworthiness allowances, 13 (~20%) of which were issued for posts by politicians. This data, along with examples of and details on these allowances, is now available in our Transparency Center.

Crisis Policy Protocol: During crises, we assess on- and off-platform risks of imminent harm and respond with specific policy and product actions. Based on a recommendation from the Oversight Board, and to strengthen our existing work, we are publishing our Crisis Policy Protocol (CPP), which codifies our content policy response to crises. This framework helps us assess crisis situations that may require a new or unique policy response, and it guides our use of targeted crisis policy interventions in a timely manner that is consistent with observed risks and past interventions. This helps make our crisis policy response more calibrated and sustainable as we seek to balance a consistent global response with adapting to quickly changing conditions. Developing the protocol involved original research and consultations with more than 50 external experts worldwide in national security, conflict prevention, hate speech, humanitarian response and human rights.

Empowering Expression and Protecting Safety

We are always refining our policies and enforcement so that we both support people’s ability to express themselves and protect safety across our platforms. We know we don’t always get it right, and we’re looking into ways to improve our proactive enforcement, such as by applying AI technology.

We found that showing warning screens to discourage people from posting hate speech or bullying and harassment prevented some of this content, which could have violated our Community Standards, from being posted.

We’re also expanding a test of Flagged by Facebook, which allows some group admins to better shape their group culture and take context into account by keeping some content in their groups that might otherwise be flagged for bullying and harassment. For example, through this test an admin for a group of fish tank enthusiasts allowed a flagged comment that called a fish “fatty,” which was not intended to be offensive.

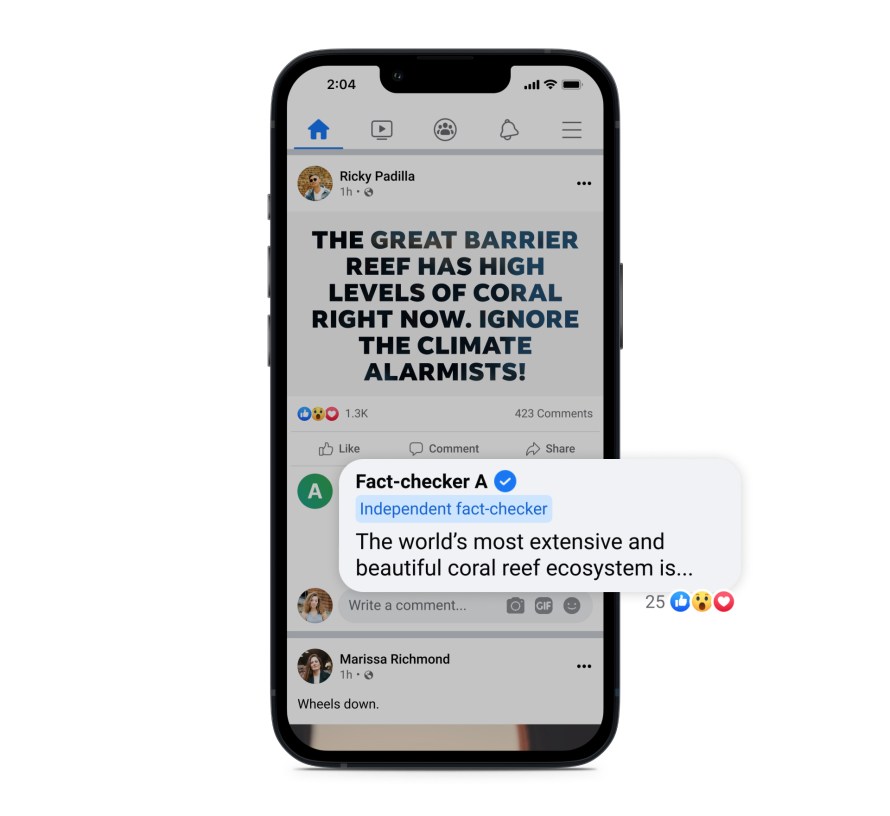

Fact-Checking and Helpful Comments

We have built the largest global fact-checking network of any platform, with more than 90 fact-checking partners around the world who review and rate viral misinformation. In Q2, we displayed warnings on over 200 million distinct pieces of content on Facebook (including re-shares) globally based on over 130,000 debunking articles written by our fact-checking partners.

In the US, we partner with 10 fact-checking organizations, five of which cover content in Spanish. We’re adding TelevisaUnivision as another US partner to cover Spanish-language content.

We’ve also launched a pilot program on Facebook that aims to show people more reliable information and empower them to decide what to read, trust and share. A small group of our US third-party fact-checking partners can comment in English and Spanish to provide more information on public Facebook posts that they determine could benefit from more context. This effort is separate from our third-party fact-checking program, and the comments aren’t fact-check ratings. They won’t result in any enforcement penalties for content owners, nor will they affect a post’s distribution or the overall status of a Page. Also, unlike fact-checks, these comments will appear on Facebook posts that may not be verifiably false but that people may find misleading.

The post Community Standards Enforcement Report, Second Quarter 2022 appeared first on Meta.
