DeepMind has created an AI capable of writing code to solve arbitrary problems posed to it, as it demonstrated by entering a coding challenge and placing... well, somewhere in the middle. It won’t be taking any software engineers’ jobs just yet, but it’s promising and may help automate basic tasks.

The team at DeepMind, a subsidiary of Alphabet, is aiming to create intelligence in as many forms as it can, and of course these days the task to which many of our great minds are bent is coding. Code is a fusion of language, logic and problem-solving that is both a natural fit for a computer’s capabilities and a tough one to crack.

Of course, DeepMind isn’t the first to attempt something like this: OpenAI has its own natural-language coding project, Codex, which powers both GitHub Copilot and a Microsoft test that lets GPT-3 finish your lines.

DeepMind’s paper throws a little friendly shade on the competition in describing why it is going after the domain of competitive coding:

> Recent large-scale language models have demonstrated an impressive ability to generate code, and are now able to complete simple programming tasks. However, these models still perform poorly when evaluated on more complex, unseen problems that require problem-solving skills beyond simply translating instructions into code.

OpenAI may have something to say about that (and we can probably expect a riposte in its next paper on these lines), but as the researchers go on to point out, competitive programming problems generally involve a combination of interpretation and ingenuity that isn’t really on display in existing code AIs.

To take on the domain, DeepMind trained a new model using selected GitHub libraries and a collection of coding problems and their solutions. Simply said, but not a trivial build. Once the model was complete, the team put it to work on 10 recent contests (needless to say, unseen by the AI) from Codeforces, which hosts this kind of competition.

It placed somewhere in the middle of the pack, just above the 50th percentile. That may be middling performance for a human (not that it’s easy), but for a first attempt by a machine learning model it’s pretty remarkable.

“I can safely say the results of AlphaCode exceeded my expectations,” said Codeforces founder Mike Mirzayanov. “I was skeptical because even in simple competitive problems it is often required not only to implement the algorithm, but also (and this is the most difficult part) to invent it. AlphaCode managed to perform at the level of a promising new competitor.”

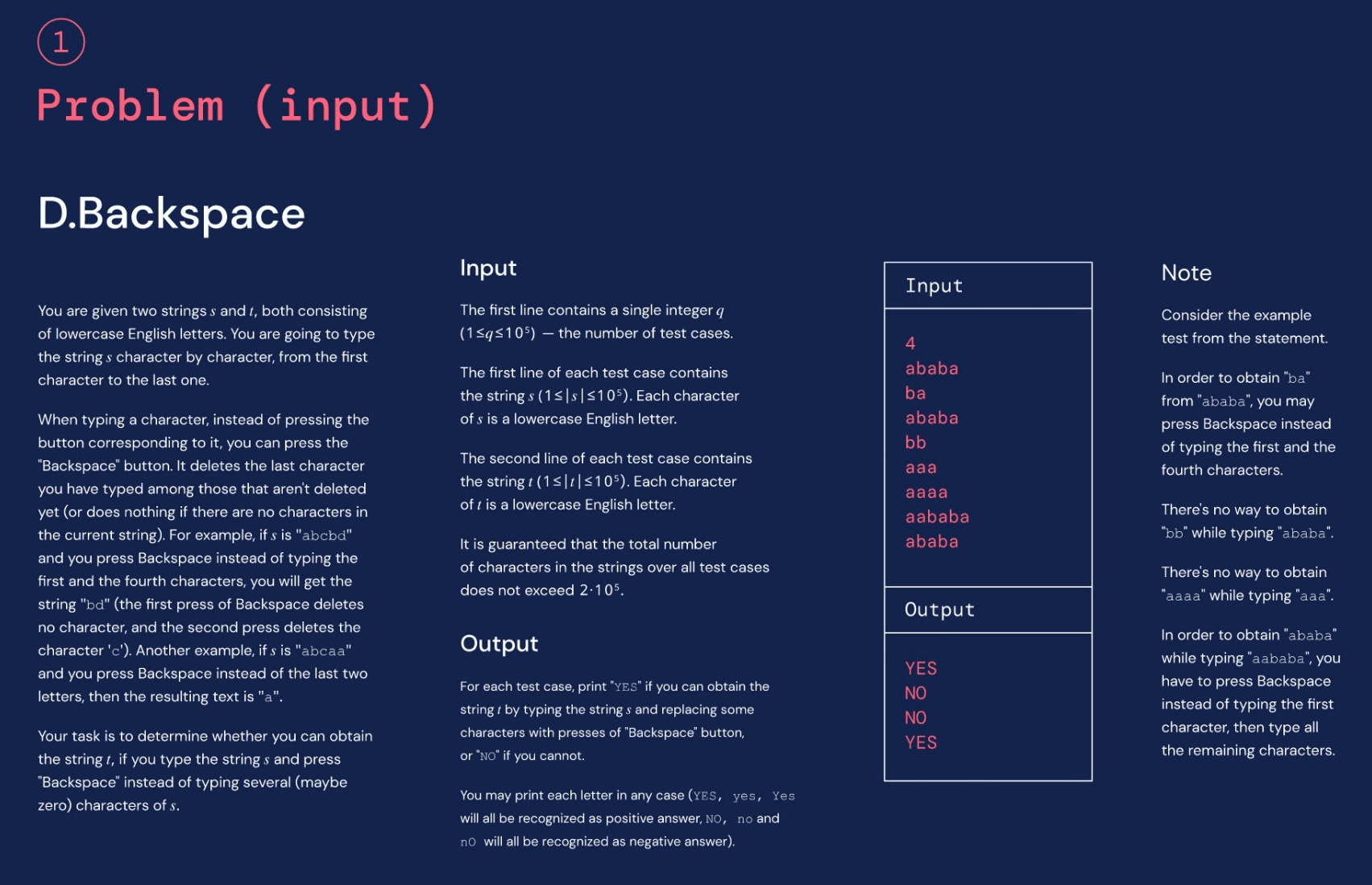

You can see an example of the type of problem AlphaCode solved, and its solution, below:

(Note to DeepMind: SVG is an annoying format for figures like this.)

As you can see, it’s a clever solution but not quite enterprise SaaS-grade stuff. Don’t worry — that comes later. Right now it’s enough to demonstrate that the model is capable of parsing and understanding a complex written challenge all at once and producing a coherent, workable response most of the time.

“Our exploration into code generation leaves vast room for improvement and hints at even more exciting ideas that could help programmers improve their productivity and open up the field to people who do not currently write code,” writes the DeepMind team. They’re referring to me in the last part there. If it can modify responsive layouts in CSS, it’s better than I am.

You can dive deeper into the way AlphaCode was built, and its solutions to various problems, at this demo site.
