Billions of people use Facebook and Instagram every day to share the ups and downs of life, to connect with people who share their interests, and to discover content they enjoy. To make everyone’s experience on our apps unique and personalized to them, we use AI systems to decide what content appears, informed by the choices they make.

I wrote previously about the relationship between you and the algorithms Meta uses to shape what you see on Facebook and Instagram, challenging the myth that algorithms leave people powerless over the content they see. In that piece, I wrote that we needed to be more frank about how this relationship works and to give you more control over what you see.

Today, we’re building on that commitment by being more transparent around several of the AI systems that incorporate your feedback to rank content across Facebook and Instagram. These systems make it more likely that the posts you see are relevant and interesting to you. We’re also making it clearer how you can better control what you see on our apps, as well as testing new controls and making others more accessible. And we’re giving more detailed information for experts so they can better understand and analyze our systems.

This is part of a wider ethos of openness, transparency and accountability. With rapid advances taking place with powerful technologies like generative AI, it’s understandable that people are both excited by the possibilities and concerned about the risks. We believe that the best way to respond to those concerns is with openness. Generally speaking, we believe that as these technologies are developed, companies should be more open about how their systems work and collaborate openly across industry, government and civil society to help ensure they are developed responsibly. That starts with giving you more insight into, and control over, the content you see.

How AI Predictions Influence Recommendations

Our AI systems predict how valuable a piece of content might be to you, so we can show it to you sooner. For example, sharing a post is often an indicator that you found that post to be interesting, so predicting that you will share a post is one factor our systems take into account. As you might imagine, no single prediction is a perfect gauge of whether a post is valuable to you. So we use a wide variety of predictions in combination to get as close as possible to the right content, including some based on behavior and some based on user feedback received through surveys.
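As a rough illustration of what using predictions "in combination" can mean, here is a minimal sketch that blends several per-post predictions into one score with a weighted sum and then sorts by that score. It is not Meta's actual ranking code: the prediction names (`p_share`, `p_like`, `p_survey_valuable`), the weights, and the weighted-sum formula itself are all assumptions made for the example.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical weights, chosen only for illustration. The source does not
# say how predictions are weighted or combined.
WEIGHTS = {
    "p_share": 2.0,            # behavior-based: predicted chance you share the post
    "p_like": 1.0,             # behavior-based: predicted chance you like the post
    "p_survey_valuable": 3.0,  # feedback-based: predicted survey answer "this was valuable"
}


@dataclass
class Candidate:
    """A post plus the model predictions made for one viewer (all hypothetical)."""
    post_id: str
    predictions: dict[str, float]


def score(candidate: Candidate, weights: dict[str, float] = WEIGHTS) -> float:
    """Combine several predictions into one number with a weighted sum."""
    return sum(w * candidate.predictions.get(name, 0.0) for name, w in weights.items())


def rank(candidates: list[Candidate], weights: dict[str, float] = WEIGHTS) -> list[Candidate]:
    """Order candidates from highest to lowest combined score."""
    return sorted(candidates, key=lambda c: score(c, weights), reverse=True)


posts = [
    Candidate("a", {"p_share": 0.9, "p_like": 0.1, "p_survey_valuable": 0.1}),
    Candidate("b", {"p_share": 0.2, "p_like": 0.6, "p_survey_valuable": 0.8}),
    Candidate("c", {"p_share": 0.1, "p_like": 0.1, "p_survey_valuable": 0.1}),
]
ranked_ids = [c.post_id for c in rank(posts)]
```

In this toy data, post "a" has the highest share prediction, yet post "b" ranks first because its other predictions are stronger. That is the point of the paragraph above: no single prediction decides the outcome on its own.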

We want to be more open about how this works. A model of transparency Meta has been developing and advocating for some time is the publication of system cards, which give people insight into how our systems work in a way that is accessible for those who don’t have deep technical knowledge. Today, we are releasing 22 system cards for Facebook and Instagram. They give information about how our AI systems rank content, some of the predictions each system makes to determine what content might be most relevant to you, as well as the controls you can use to help customize your experience. They cover Feed, Stories, Reels and other surfaces where people go to find content from the accounts or people they follow. The system cards also cover AI systems that recommend “unconnected” content from people, groups, or accounts they don’t follow. You can find a more detailed explanation of the AI behind content recommendations here.

To give a further level of detail beyond what’s published in the system cards, we’re sharing the types of inputs – known as signals – as well as the predictive models these signals inform that help determine what content you will find most relevant from your network on Facebook. The categories of signals we’re releasing represent the vast majority of signals currently used in Facebook Feed ranking for this content. You can find these signals and predictions in the Transparency Center, along with how frequently they tend to be used in the overall ranking process.

We also use signals to help identify harmful content, which we remove as we become aware of it, as well as to help reduce the distribution of other types of problematic or low-quality content in line with our Content Distribution Guidelines. We’re including some examples of the signals we use to do this. But there’s a limit to what we can disclose safely. While we want to be transparent about how we try to keep bad content away from people’s Feeds, we also need to be careful not to disclose signals which might make it easier for people to circumvent our defenses.

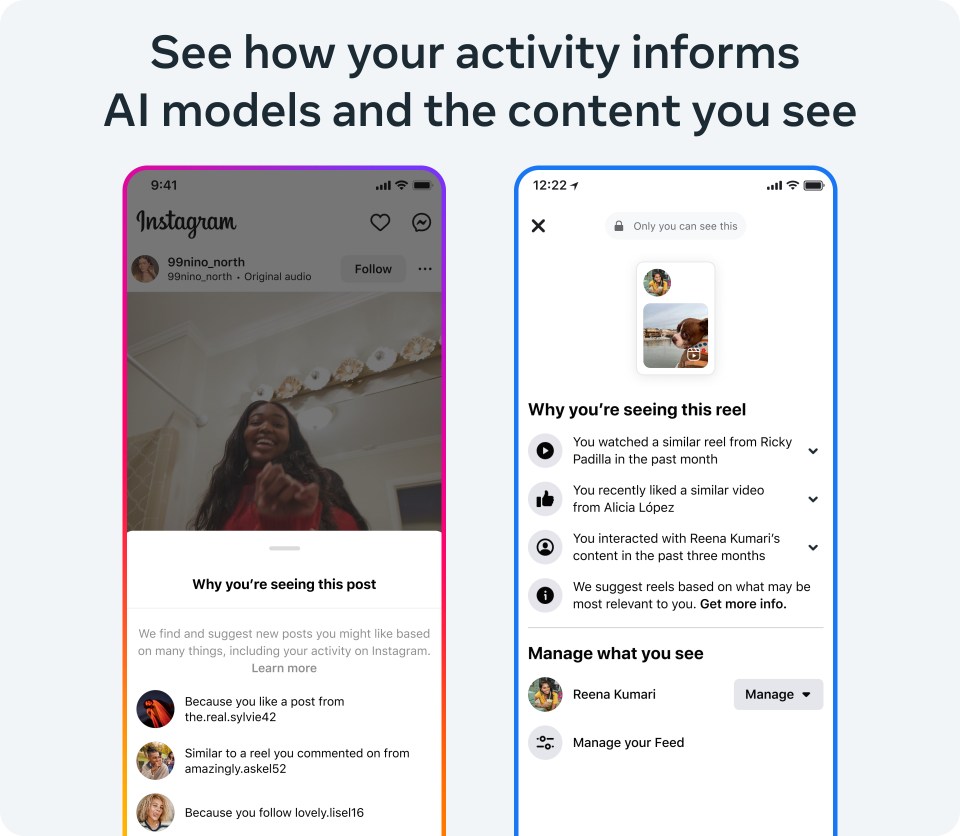

Of course, not everyone will find information just because we publish it on our website. That’s why we make it possible to see details directly in our apps about why our systems predicted content would be relevant to you, and the types of activity and inputs that may have led to that prediction. We’re expanding our “Why Am I Seeing This?” feature to the Instagram Reels tab and Explore, and to Facebook Reels, in the coming weeks, after previously launching it for some Feed content and all ads on both Facebook and Instagram. You’ll be able to click on an individual reel to see more information about how your previous activity may have informed the machine learning models that shape and deliver the reels you see.

Expanding Tools to Personalize Your Experience

By using the tools available, you have the ability to shape your experiences on our apps so you see more of the content you want to see, and less of the content you don’t. To make this easier, we’ve created centralized places on Facebook and Instagram where you can customize controls that influence the content you see on each app. You can visit your Feed Preferences on Facebook and the Suggested Content Control Center on Instagram through the three-dot menu on relevant posts, as well as through Settings.

On Instagram, we’re testing a new feature that makes it possible for you to indicate that you’re “Interested” in a recommended reel in the Reels tab, so we can show you more of what you like. The “Not Interested” feature has been available since 2021. You can learn more about influencing what you see across Instagram here.

To help you customize your experience and the content you see, we also have a “Show more, Show less” feature on Facebook, which is available on all posts in Feed, Video, and Reels via the three-dot menu. We’re working on ways to make the feature even more prominent. And if you don’t want an algorithmically ranked Feed – or just want to see what your Feed would look like without it – you can use the Feeds tab on Facebook or select Following on Instagram to switch to a chronological Feed. You can also add people to your Favorites list on both Facebook and Instagram so you can always see content from your favorite accounts.

Providing Better Tools for Researchers

We also believe an open approach to research and innovation – especially when it comes to transformative AI technologies – is better than leaving the know-how in the hands of a small number of big tech companies. That’s why we’ve released over 1,000 AI models, libraries and data sets for researchers over the last decade so they can benefit from our computing power and pursue research openly and safely. It is our ambition to continue to be transparent as we make more AI models openly available in future.

In the next few weeks, we will start rolling out a new suite of tools for researchers: Meta’s Content Library and API. The Library includes data from public posts, pages, groups, and events on Facebook. For Instagram, it will include public posts and data from creator and business accounts. Data from the Library can be searched, explored, and filtered on a graphical user interface or through a programmatic API. Researchers from qualified academic and research institutions pursuing scientific or public interest research topics will be able to apply for access to these tools through partners with deep expertise in secure data sharing for research, starting with the University of Michigan’s Inter-university Consortium for Political and Social Research. These tools will provide the most comprehensive access to publicly-available content across Facebook and Instagram of any research tool we have built to date and also help us meet new data-sharing and transparency compliance obligations.

We hope that by introducing these products to researchers early in the development process, we can receive constructive feedback to ensure we’re building the best possible tools to meet their needs.

The post How AI Influences What You See on Facebook and Instagram appeared first on Meta.
