In December, just ahead of Instagram head Adam Mosseri’s testimony before the U.S. Senate on the app’s impact on teen users, the company announced plans to roll out a series of safety features and parental controls. This morning, Instagram is updating a key set of these features: teen users under the age of 16 will now be defaulted to the app’s most restrictive content setting. The company will also prompt existing teens to do the same, and will introduce a new “Settings check-up” feature that guides teens through updating their safety and privacy settings.

The changes are rolling out to users globally, across platforms, amid increased regulatory pressure on social media apps over the safety of their younger users.

In last year’s Senate hearing, Mosseri defended Instagram’s teen safety track record in light of concerns emerging from Facebook whistleblower Frances Haugen, whose leaked documents had painted a picture of a company that was aware of the negative mental health impacts of its app on its younger users. Though the company had then argued it took adequate precautions in this area, in 2021 Instagram began to make changes with regard to teen use of its app and what they could see and do.

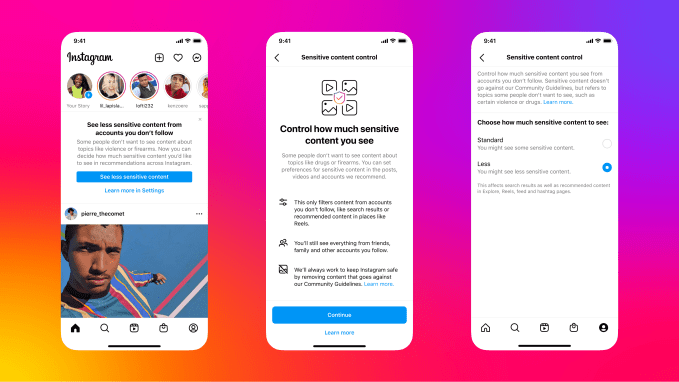

In March of this year, for instance, Instagram rolled out parental controls and safety features to protect teens from interactions with unknown adult users. In June, it updated its Sensitive Content Control, launched the year prior, to cover all the surfaces in the app where it makes recommendations. This allowed users to control sensitive content across places like Search, Reels, Accounts You Might Follow, Hashtag Pages and In-Feed Recommendations.

It’s this Content Control feature that’s receiving the update today.

The June release had put in place the infrastructure to let users adjust their settings around “sensitive content,” that is, content that may depict graphic violence, is sexual in nature, or concerns restricted goods, among other things. At the time, it offered three levels for this content: “More,” “Less,” or “Standard.”

Previously, teens under 18 could choose only the “Standard” or “Less” setting. They could not switch to “More” until they were adults.

Image Credits: Instagram

Now, with today’s update, teens under the age of 16 will be defaulted to the “Less” control if they are new to Instagram. (They can still later change this to Standard if they choose.)

Existing teens will be shown a prompt encouraging them to choose the “Less” control as well, though they are not required to.

As before, this impacts the content and accounts seen across Search, Explore, Hashtag Pages, Reels, Feed Recommendations and Suggested Accounts, Instagram notes.

“It’s all in an effort for teens to basically have a safer search experience, to not see so much sensitive content and to automatically see less than any adult would on the platform,” said Jeanne Moran, Instagram Policy Communications Manager, Youth Safety & Well-Being, in a conversation with TechCrunch. “…we’re nudging teens to choose ‘Less,’ but if they feel like they can handle the ‘Standard’ then they can do that.”

Of course, how effective this change will be depends on whether teens actually follow the prompt’s suggestion, and on whether they entered their correct age in the app to begin with. Many younger users lie about their birthdate when they join apps in order to avoid being defaulted to more restrictive experiences. Instagram has been attempting to address this problem with A.I. and other technologies, including requiring users who hadn’t provided a birthday to do so, using A.I. to scan for possible fake ages (e.g., by finding birthday posts where the age doesn’t match the birthdate on file) and, more recently, testing new tools like video selfies.

The company hasn’t said how many accounts it’s caught and adjusted through the use of these technologies, however.

Separately from the news about its Sensitive Content Control changes, the company is rolling out a new “Settings check-up” designed to encourage all teens under 18 on the app to update their safety and privacy settings.

This prompt points teens to tools for adjusting who can reshare their content, who can message and contact them, and how much time they spend on Instagram, as well as to the Sensitive Content Control settings.

The changes are part of a broader response across consumer technology to concerns that apps need to do better at serving younger users. The E.U., in particular, has had its eye on social apps like Instagram through the conditions set under its General Data Protection Regulation (GDPR), while the U.K. has applied its Age Appropriate Design Code. In fact, Instagram is currently awaiting a decision on a complaint about its handling of children’s data in the E.U. Elsewhere, including in the U.S., lawmakers are weighing options that would further regulate social apps and consumer tech in a similar fashion, including a revamp of COPPA and the implementation of new laws.

In response to the new features, child-friendly policy advocate Common Sense Media’s founder and CEO Jim Steyer suggested there’s still more Instagram could do to make its app safe.

“The safety measures for minors implemented by Instagram today are a step in the right direction that, after much delay, start to address the harms to teens from algorithmic amplification,” Steyer said, in a prepared statement. “Defaulting young users to a safer version of the platform is a substantial move that could help lessen the amount of harmful content teens see on their feeds. However, the efforts to create a safer platform for young users are more complicated than this one step and more needs to be done.”

He said Instagram should completely block harmful and inappropriate posts from teens’ profiles, and should route users to this safer version of the platform if it so much as suspects a user is under 16, regardless of the age entered at sign-up. He also pushed Instagram to add more harmful behaviors to its list of “sensitive content,” including content that promotes self-harm and disordered eating.

Instagram says the Sensitive Content Control changes are rolling out now. The Settings check-up, meanwhile, has just entered testing.
