On Wednesday, Meta announced a new set of tools designed to protect young users, an overdue response to widespread criticism that the company doesn’t do enough to protect its most vulnerable users.

Parents, tech watchdogs and lawmakers alike have long called for the company to do more to keep teens safe on Instagram, which invites anyone 13 or older to sign up for an account.

To that end, Meta is introducing something it calls “Family Center,” a centralized hub of safety tools that parents will be able to tap into to control what kids can see and do across the company’s apps, starting with Instagram.

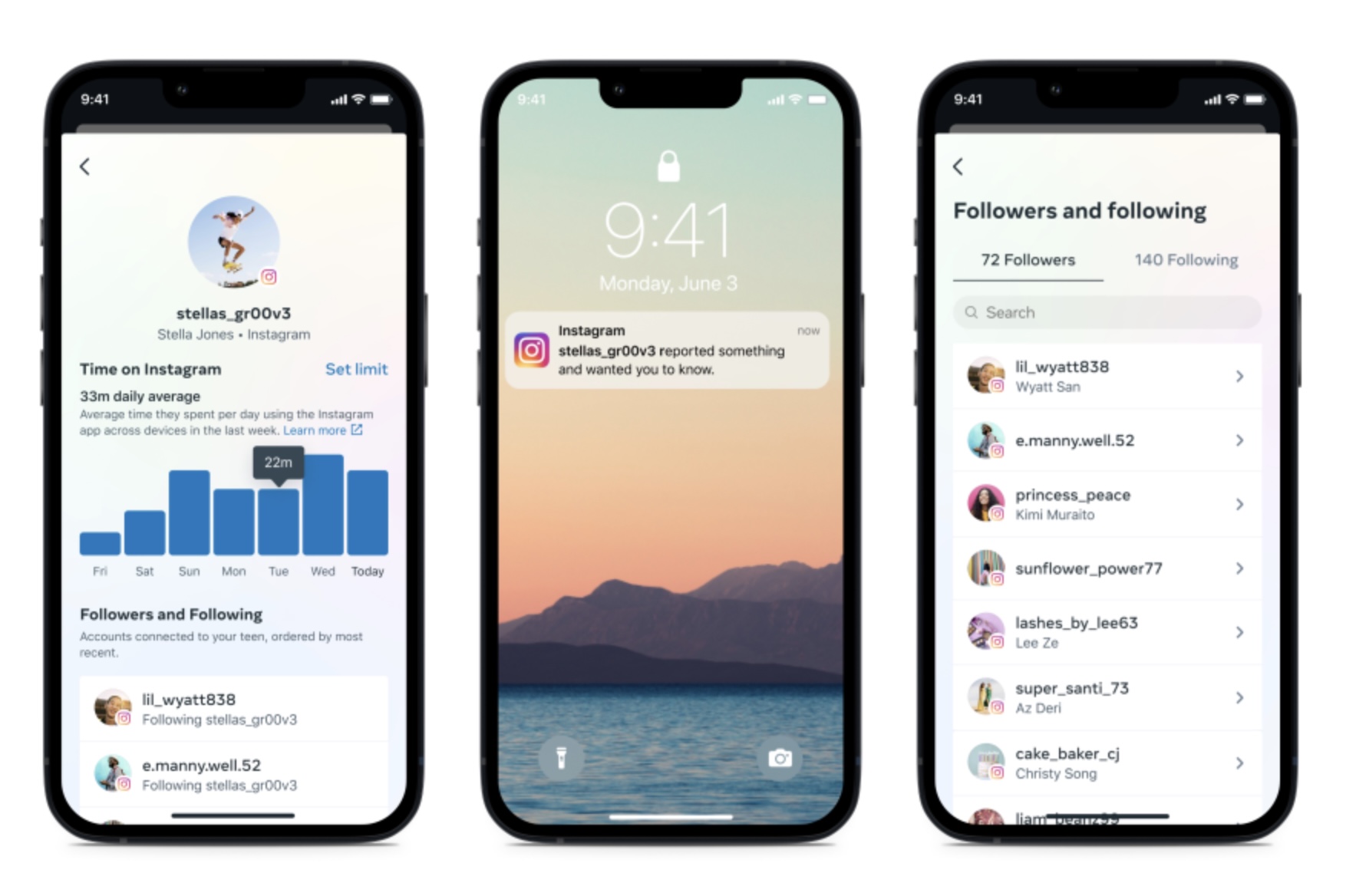

The new set of supervision features lends parents and guardians some crucial transparency into young users’ Instagram habits. The tools will allow parents to monitor how much time a kid spends on the app, see which accounts the kid has recently followed and which accounts have followed them, and receive notifications about any accounts the kid has reported.

Image Credits: Meta

Those tools will roll out today on Instagram in the U.S. and are on the way to Meta’s VR platform in May and the rest of Meta’s apps (remember Facebook?) some time in the coming months, including to global users. The company characterized the tools as the “first step in a longer-term journey,” though why it took so long to take these initial measures to protect teens from the unsavory side of its software is less clear.

For the time being, teenaged Instagram users will have to enable the safety tools from within their own accounts, though the company says the option for parents to initiate the supervision mode will be implemented by June. Instagram also plans to build out more controls, including a way for parents to restrict app usage to certain hours and a setting that would allow multiple parents to co-supervise an account.

Young and vulnerable

In the last year, Instagram has faced intense scrutiny over its lack of robust safety features designed to protect young users. It technically doesn’t allow anyone under the age of 13 to sign up for the app, though there are few obstacles preventing kids from using social media.

Instagram previously announced that it would apply AI and machine learning to the problem of making sure its users are 13 and older, but the company knows that children and tweens still easily find their way onto the app.

“While many people are honest about their age, we know that young people can lie about their date of birth,” the company wrote in a blog post last year. “We want to do more to stop this from happening, but verifying people’s age online is complex and something many in our industry are grappling with.”

The company argues that the challenge of weeding out young users is the reason it planned to build out a version of Instagram designed for kids, which BuzzFeed News revealed early last year. YouTube and TikTok both offer versions of their own social apps customized for children under 13, though Instagram’s own plans look a bit late to the party.

Last September, The Wall Street Journal published an investigative series reporting on the app’s negative impact on the mental health of teen girls, a scandal that hastened the (temporary?) end of Instagram for Kids. Meta went on to pause plans for Instagram for Kids in light of the WSJ reporting, public backlash and an aggressive, unusually bipartisan pushback from industry regulators.

Meta is also facing pressure from the competition. TikTok introduced its own tools to allow parents to monitor their kids’ app usage two years ago and has since made those controls more granular. The company launched its own kid-mode app, “TikTok for Younger Users,” which restricts risky features like messaging and commenting, all the way back in 2019.
