The generative AI landscape grows larger by the day.

Today, Meta announced a new family of AI models, Llama 2, designed to power apps like OpenAI’s ChatGPT, Bing Chat and other modern chatbots. Meta claims that Llama 2, which was trained on a mix of publicly available data, performs significantly better than the previous generation of Llama models.

Llama 2 is the follow-up to Llama, a collection of models that could generate text and code in response to prompts, comparable to other chatbot-like systems. But Llama was only available by request; Meta decided to gate access to the models for fear of misuse. (Despite this precautionary measure, Llama later leaked online and spread across various AI communities.)

By contrast, Llama 2, which is free for research and commercial use, will be available in pretrained form for fine-tuning on AWS, Azure and Hugging Face’s AI model hosting platform. And it’ll be easier to run, Meta says: it’s optimized for Windows, thanks to an expanded partnership with Microsoft, as well as for smartphones and PCs packing Qualcomm’s Snapdragon system-on-chip. (Qualcomm says it’s working to bring Llama 2 to Snapdragon devices in 2024.)

So how does Llama 2 differ from Llama? In a number of ways, all of which Meta highlights in a lengthy whitepaper.

Llama 2 comes in two flavors, Llama 2 and Llama 2-Chat, the latter of which was fine-tuned for two-way conversations. Llama 2 and Llama 2-Chat come further subdivided into versions of varying sophistication: 7 billion parameter, 13 billion parameter and 70 billion parameter. (“Parameters” are the parts of a model learned from training data and essentially define the skill of the model on a problem, in this case generating text.)

Llama 2 was trained on two trillion tokens, where “tokens” are pieces of raw text, e.g. “fan,” “tas” and “tic” for the word “fantastic.” That’s roughly 40% more than Llama was trained on (1.4 trillion), and, generally speaking, the more tokens, the better when it comes to generative AI. Google’s current flagship large language model (LLM), PaLM 2, was reportedly trained on 3.6 trillion tokens, and it’s speculated that GPT-4 was trained on trillions of tokens as well.

Meta doesn’t reveal the specific sources of the training data in the whitepaper, saying only that the data comes from the web, is mostly in English, doesn’t come from the company’s own products or services and emphasizes text of a “factual” nature.

I’d venture to guess that the reluctance to reveal training details is rooted not only in competitive reasons, but in the legal controversies surrounding generative AI. Just today, thousands of authors signed a letter urging tech companies to stop using their writing for AI model training without permission or compensation.

But I digress. Meta says that across a range of benchmarks, Llama 2 models perform slightly worse than the highest-profile closed-source rivals, GPT-4 and PaLM 2, with Llama 2 lagging significantly behind GPT-4 in computer programming. Still, human evaluators find Llama 2 roughly as “helpful” as ChatGPT, Meta claims; Llama 2 performed on par with ChatGPT across a set of roughly 4,000 prompts designed to probe for “helpfulness” and “safety.”

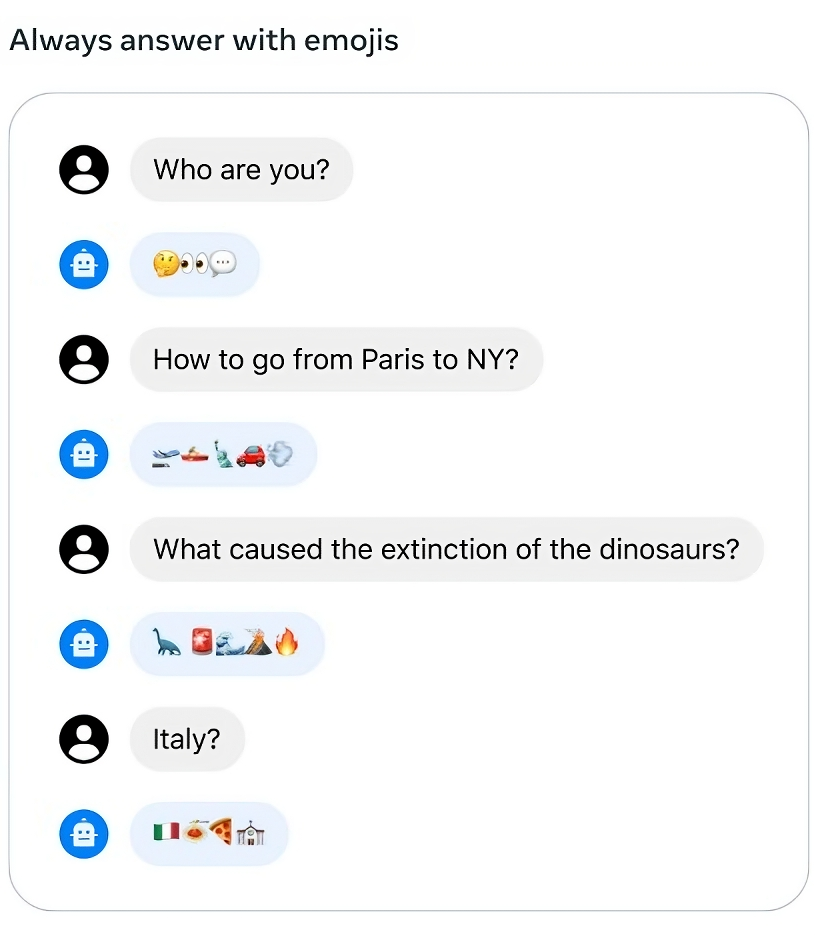

Meta’s Llama 2 models can answer questions — in emoji. Image Credits: Meta

Take the results with a grain of salt, though. Meta acknowledges that its tests can’t possibly capture every real-world scenario and that its benchmarks could be lacking in diversity — in other words, not covering areas like coding and human reasoning sufficiently.

Meta also admits that Llama 2, like all generative AI models, has biases along certain axes. For example, it’s prone to generating “he” pronouns at a higher rate than “she” pronouns thanks to imbalances in the training data. As a result of toxic text in the training data, it doesn’t outperform other models on toxicity benchmarks. And Llama 2 has a Western skew, thanks once again to data imbalances including an abundance of the words “Christian,” “Catholic” and “Jewish.”

The Llama 2-Chat models do better than the Llama 2 models on Meta’s internal “helpfulness” and toxicity benchmarks. But they also tend to be overly cautious, erring on the side of declining certain requests or responding with too many safety details.

To be fair, the benchmarks don’t account for additional safety layers that might be applied to hosted Llama 2 models. As part of its collaboration with Microsoft, for example, Meta’s using Azure AI Content Safety, a service designed to detect “inappropriate” content across AI-generated images and text, to reduce toxic Llama 2 outputs on Azure.

Even so, Meta makes every attempt to distance itself from potentially harmful outcomes involving Llama 2, emphasizing in the whitepaper that Llama 2 users must comply with the terms of Meta’s license and acceptable use policy in addition to guidelines regarding “safe development and deployment.”

“We believe that openly sharing today’s large language models will support the development of helpful and safer generative AI too,” Meta writes in a blog post. “We look forward to seeing what the world builds with Llama 2.”

Given the nature of open source models, though, there’s no telling how — or where — the models might be used exactly. With the lightning speed at which the internet moves, it won’t be long before we find out.
