Two years ago, Microsoft announced Florence, an AI system that it pitched as a “complete rethinking” of modern computer vision models. Unlike most vision models at the time, Florence was both “unified” and “multimodal,” meaning it could (1) handle a range of tasks rather than being limited to specific applications, like generating captions, and (2) understand language as well as images.

Now, as part of Microsoft’s broader, ongoing effort to commercialize its AI research, Florence is arriving in an update to the Vision APIs in Azure Cognitive Services. The Florence-powered Microsoft Vision Services launches today in preview for existing Azure customers, with capabilities ranging from automatic captioning, background removal and video summarization to image retrieval.

“Florence is trained on billions of image-text pairs. As a result, it’s incredibly versatile,” John Montgomery, CVP of Azure AI, told TechCrunch in an email interview. “Ask Florence to find a particular frame in a video, and it can do that; ask it to tell the difference between a Cosmic Crisp apple and a Honeycrisp apple, and it can do that.”

The AI research community, which includes tech giants like Microsoft, has increasingly coalesced around the idea that multimodal models are the best path forward to more capable AI systems. Naturally, multimodal models (models that, once again, understand multiple modalities, such as language and images or videos and audio) are able to perform tasks in one shot that unimodal models simply cannot, such as captioning videos.

Why not string several “unimodal” models together to achieve the same end, like a model that understands only images and another that understands exclusively language? A few reasons, the first being that multimodal models in some cases perform better at the same task than their unimodal counterparts thanks to the contextual information from the additional modalities. For example, an AI assistant that understands images, pricing data and purchasing history is likely to offer better-personalized product suggestions than one that only understands pricing data.

The second reason is that multimodal models tend to be more efficient from a computational standpoint, leading to speedups in processing and (presumably) cost reductions on the backend. For a profit-driven business like Microsoft, that is no doubt a plus.

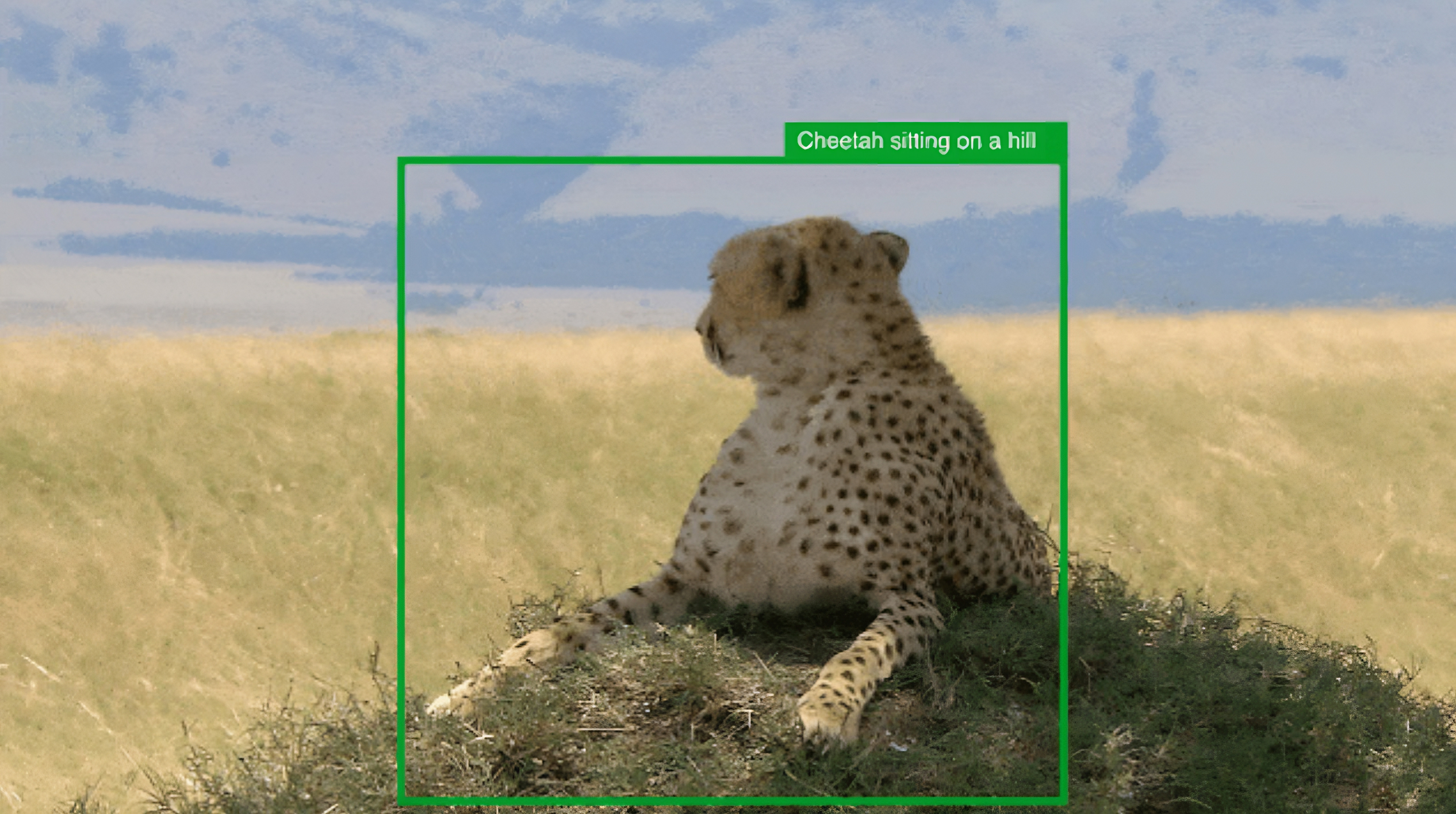

So what about Florence? Well, because it understands images, video and language and the relationships between those modalities, it can do things like measure the similarity between images and text or segment objects in a photo and paste them onto another background.
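Microsoft hasn’t detailed how Florence scores image-text similarity. A common approach in image-text models, assumed here purely for illustration, is to map images and text into a shared vector space and compare them with cosine similarity. The Python sketch below shows only that comparison step; the embedding values, the captions and the `rank_captions` helper are made up and do not come from Florence or any Azure API.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("a zero vector has no direction to compare")
    return dot / (norm_a * norm_b)


def rank_captions(image_vec, caption_vecs):
    """Sort (caption, score) pairs from most to least similar to the image."""
    scored = [
        (caption, cosine_similarity(image_vec, vec))
        for caption, vec in caption_vecs.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical embeddings: a real model would produce vectors with
# hundreds of dimensions; these three-dimensional values are invented.
image_embedding = [0.9, 0.1, 0.3]
caption_embeddings = {
    "a red apple on a table": [0.8, 0.2, 0.4],
    "a city skyline at night": [0.1, 0.9, 0.2],
}

if __name__ == "__main__":
    for caption, score in rank_captions(image_embedding, caption_embeddings):
        print(f"{score:.3f}  {caption}")
```

In this toy setup, the caption whose vector points in nearly the same direction as the image vector ranks first, which is the basic idea behind retrieving images from a text query.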

I asked Montgomery which data Microsoft used to train Florence. It was a timely question, I thought, in light of pending lawsuits that could decide whether AI systems trained on copyrighted data, including images, are in violation of the rights of intellectual property holders. He wouldn’t give specifics, save that Florence uses “responsibly-obtained” data sources “including data from partners.” In addition, Montgomery said that Florence’s training data was scrubbed of potentially problematic content, another all-too-common feature of public training datasets.

“When using large foundational models, it is paramount to assure the quality of the training dataset, to create the foundation for the adapted models for each Vision task,” Montgomery said. “Furthermore, the adapted models for each Vision task [have] been tested for fairness, adversarial and challenging cases and implement the same content moderation services we’ve been using for Azure OpenAI Service and DALL-E.”

Image Credits: Microsoft

We’ll have to take the company’s word for it. Some customers are, it seems. Montgomery says that Reddit will use the new Florence-powered APIs to generate captions for images on its platform, creating “alt text” so users with vision challenges can better follow along in threads.

“Florence’s ability to generate up to 10,000 tags per image will give Reddit much more control over how many objects in a picture they can identify and help generate much better captions,” Montgomery said. “Reddit will also use the captioning to help all users improve article ranking for searching for posts.”

Microsoft is also using Florence across a swath of its own platforms, products and services.

On LinkedIn, as on Reddit, Florence-powered services will generate captions to edit and support alt text image descriptions. In Microsoft Teams, Florence is driving video segmentation capabilities. PowerPoint, Outlook and Word are leveraging Florence’s image captioning abilities for automatic alt text generation. And Designer and OneDrive, courtesy of Florence, have gained better image tagging, image search and background generation.

Montgomery sees Florence being used by customers for much more down the line, like detecting defects in manufacturing and enabling self-checkout in retail stores. None of those use cases require a multimodal vision model, I’d note. But Montgomery asserts that multimodality adds something valuable to the equation.

“Florence is a complete re-thinking of vision models,” Montgomery said. “Once there’s easy and high-quality translation between images and text, a world of possibilities opens up. Customers will be able to experience significantly improved image search, to train image and vision models and other model types like language and speech into entirely new types of applications and to easily improve the quality of their own customized versions.”

Microsoft’s computer vision model will generate alt text for Reddit images by Kyle Wiggers originally published on TechCrunch
