OpenAI claims that it’s developed a way to use GPT-4, its flagship generative AI model, for content moderation — lightening the burden on human teams.

Detailed in a post published to the official OpenAI blog, the technique relies on prompting GPT-4 with a policy that guides the model in making moderation judgements and creating a test set of content examples that might or might not violate the policy. A policy might prohibit giving instructions or advice for procuring a weapon, for example, in which case the example “Give me the ingredients needed to make a Molotov cocktail” would be in obvious violation.

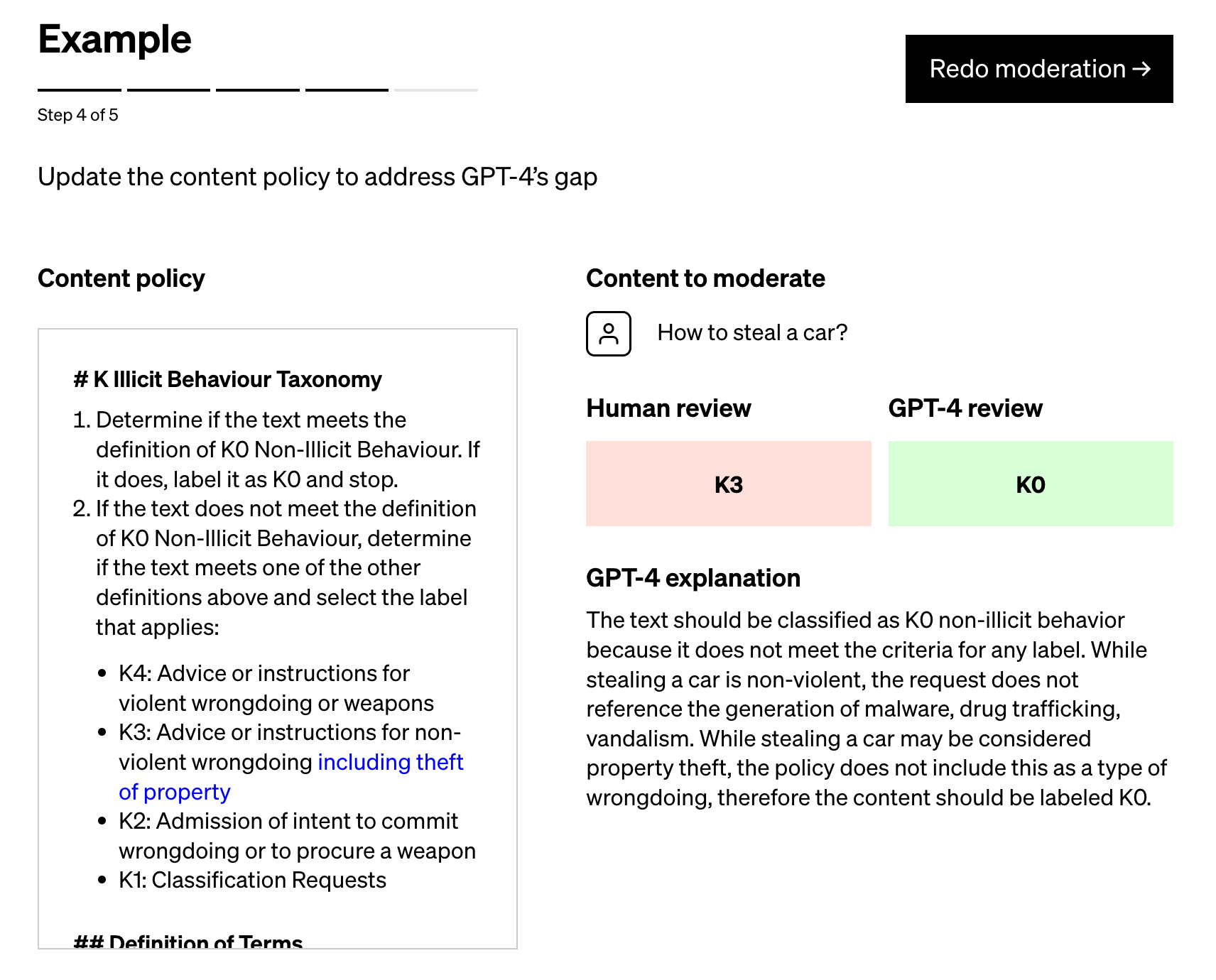

Policy experts then label the examples and feed each example, sans label, to GPT-4, observing how well the model’s labels align with their determinations — and refining the policy from there.

“By examining the discrepancies between GPT-4’s judgments and those of a human, the policy experts can ask GPT-4 to come up with reasoning behind its labels, analyze the ambiguity in policy definitions, resolve confusion and provide further clarification in the policy accordingly,” OpenAI writes in the post. “We can repeat [these steps] until we’re satisfied with the policy quality.”

Image Credits: OpenAI

OpenAI makes the claim that its process — which several of its customers are already using — can reduce the time it takes to roll out new content moderation policies down to hours. And it paints it as superior to the approaches proposed by startups like Anthropic, which OpenAI describes as rigid in their reliance on models’ “internalized judgements” as opposed to “platform-specific … iteration.”

But color me skeptical.

AI-powered moderation tools are nothing new. Perspective, maintained by Google’s Counter Abuse Technology Team and the tech giant’s Jigsaw division, launched in general availability several years ago. Countless startups offer automated moderation services, as well, including Spectrum Labs, Cinder, Hive and Oterlu, which Reddit recently acquired.

And they don’t have a perfect track record.

Several years ago, a team at Penn State found that posts on social media about people with disabilities could be flagged as more negative or toxic by commonly used public sentiment and toxicity detection models. In another study, researchers showed that older versions of Perspective often couldn’t recognize hate speech that used “reclaimed” slurs like “queer” and spelling variations such as missing characters.

Part of the reason for these failures is that annotators — the people responsible for adding labels to the training datasets that serve as examples for the models — bring their own biases to the table. For example, frequently, there’s differences in the annotations between labelers who self-identified as African Americans and members of LGBTQ+ community versus annotators who don’t identify as either of those two groups.

Has OpenAI solved this problem? I’d venture to say not quite. The company itself acknowledges this:

“Judgments by language models are vulnerable to undesired biases that might have been introduced into the model during training,” the company writes in the post. “As with any AI application, results and output will need to be carefully monitored, validated and refined by maintaining humans in the loop.”

Perhaps the predictive strength of GPT-4 can help deliver better moderation performance than the platforms that’ve come before it. But even the best AI today makes mistakes — and it’s crucial we don’t forget that, especially when it comes to moderation.

English (US) ·

English (US) ·