Run.ai, the well-funded service for orchestrating AI workloads, made a name for itself in the last couple of years by helping its users get the most out of their GPU resources, on-premises and in the cloud, to train their models. But it’s no secret that training models is one thing and putting them into production is another, and that’s where a lot of these projects still fail. It’s perhaps no surprise, then, that the company, which sees itself as an end-to-end platform, is now moving beyond training to also help its customers run their inferencing workloads as efficiently as possible, whether in a private or public cloud or on the edge. As part of this, the company’s platform now also offers an integration with Nvidia’s Triton Inference Server software, thanks to a close partnership between the two companies.

“One of the things that we identified in the last 6 to 12 months is that organizations are starting to move from building and training machine learning models to actually having those models in production,” Run.ai co-founder and CEO Omri Geller told me. “We started to invest a lot of resources internally in order to crack this challenge as well. We believe that we cracked the training part and built the right resource management there, so we are now focused on helping organizations manage their compute resources for inferencing, as well.”

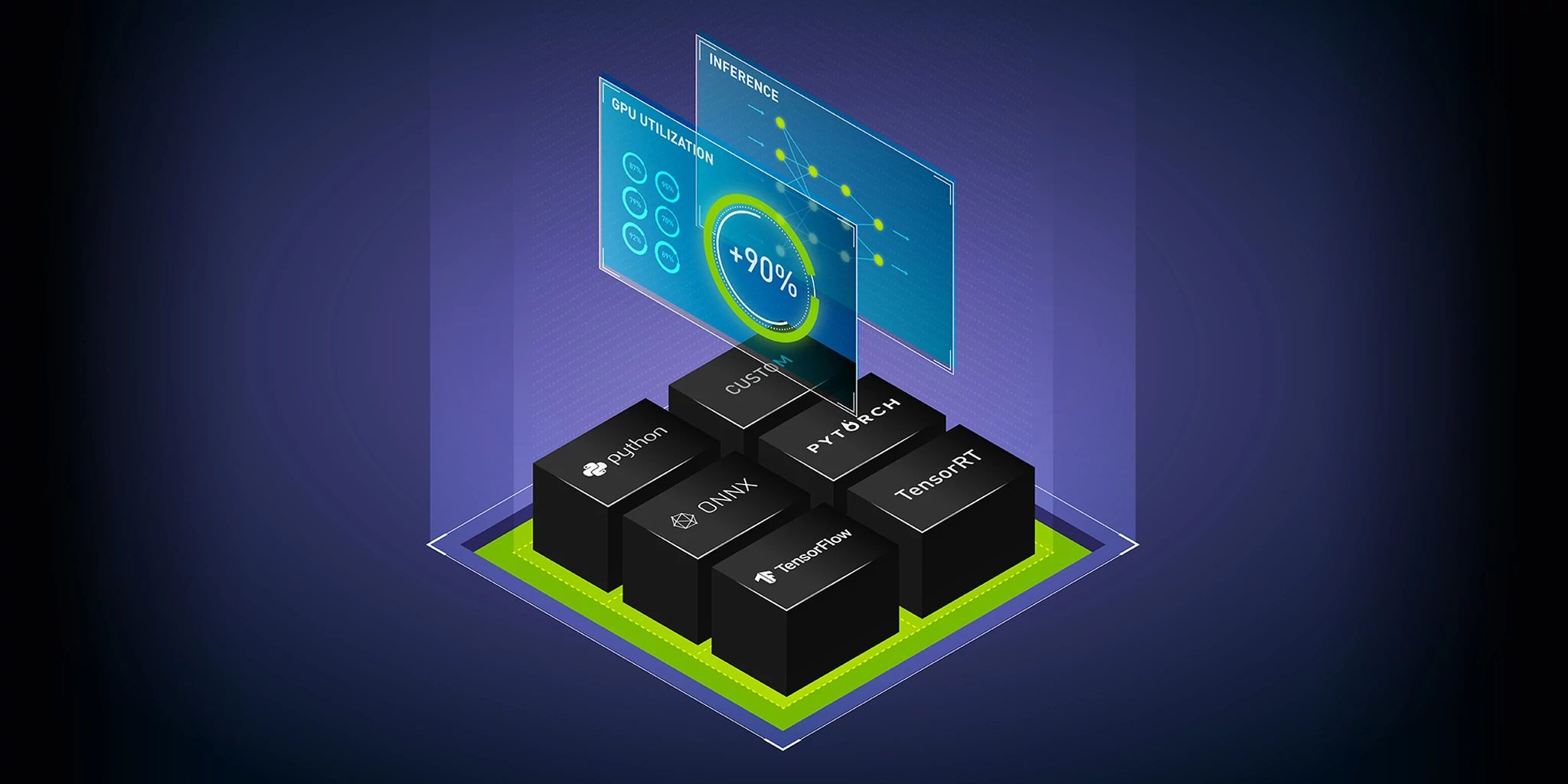

The idea here is to make it as easy as possible for businesses to deploy their models. Run.ai promises a two-step deployment process that doesn’t involve writing YAML files. Thanks to its early bet on containers and Kubernetes, Run.ai is now able to move these inferencing workloads to the most efficient hardware. And with the new Nvidia integration into the Run.ai Atlas platform, users can even deploy multiple models (or multiple instances of the same model) on the Triton Inference Server, with Run.ai handling the auto-scaling and prioritization on a per-model basis. Run.ai is also part of Nvidia’s LaunchPad program.

While inferencing doesn’t require the same kinds of massive compute resources it takes to train a model, Nvidia’s Manuvir Das, the company’s VP of enterprise computing, noted that these models are becoming increasingly large and deploying those on a CPU just isn’t possible. “We built this thing called Triton Inference Server, which is about doing your inference not just on CPUs but also on GPUs — because the power of the GPU has begun to matter for the inference,” he explained. “It used to be you needed the GPU to do the training and once you’ve got the models, you could happily deploy them on CPUs. But more and more, the models have become bigger and more complex. So you need to actually run them on the GPU.”

And as Geller added, models will only get more complex over time. He noted that there is, after all, a direct correlation between the computational complexity of models and their accuracy — and hence the problems businesses can solve with those models.

Even though Run.ai’s early focus was on training, the company was able to take a lot of the technologies it built for that and apply them to inferencing as well. The resource-sharing systems the company built for training, for example, also apply to inferencing, where certain models may need more resources to be able to run in real time.

Now, you may think that these are capabilities that Nvidia, too, could build into its Triton Inference Server, but Das noted that this is not the way the company is approaching the market. “Anybody doing data science at scale needs a really good end-to-end ML ops platform to do the whole thing,” he said. “That’s what Run.ai does well. And then what we do underneath, we provide the low-level constructs to really utilize the GPU individually really well and then if we integrate it right, you get the best of both things. That’s one reason why we worked well together because the separation of responsibilities has been clear to both of us from the beginning.”

In addition to the Nvidia partnership, Run.ai today also announced a number of other updates to its platform. These include new inference-focused metrics and dashboards, as well as the ability to deploy models on fractional GPUs and to auto-scale them based on their individual latency Service Level Agreements. The platform can now also scale deployments all the way down to zero, which reduces cost when a model is not receiving traffic.
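
To make latency-based auto-scaling and scale-to-zero a little more concrete, here is a minimal sketch in Python. It is not Run.ai's algorithm, which the company has not described here. It assumes a simple proportional rule of the kind used by common Kubernetes autoscalers, and the function name, parameters and thresholds are all hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    observed_latency_ms: float,
    target_latency_ms: float,
    requests_in_window: int,
    max_replicas: int,
) -> int:
    """Illustrative replica count for one model (hypothetical rule, not Run.ai's)."""
    # Scale to zero: no traffic in the observation window means no replicas.
    if requests_in_window == 0:
        return 0
    # Cold start: traffic arrived while the model was scaled to zero.
    if current_replicas == 0:
        return 1
    # Proportional rule: grow or shrink by the ratio of observed to target latency.
    wanted = math.ceil(current_replicas * observed_latency_ms / target_latency_ms)
    # Keep at least one replica while there is traffic, and respect the cap.
    return max(1, min(wanted, max_replicas))


# Latency is three times the target, so the sketch asks for three times the replicas.
print(desired_replicas(2, 300.0, 100.0, requests_in_window=50, max_replicas=10))
# An idle model is scaled down to zero.
print(desired_replicas(3, 50.0, 100.0, requests_in_window=0, max_replicas=10))
```

Under this rule, each model gets its own latency target, which is what allows scaling decisions to be made on a per-model basis.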
