Spotify has bigger plans for the technology behind its new AI DJ feature after seeing positive consumer reaction to it. Launched just ahead of the company’s Stream On event in L.A. last week, the AI DJ curates a personalized selection of music combined with spoken commentary delivered in a realistic-sounding, AI-generated voice. Under the hood, the feature leverages the latest in AI technologies and Large Language Models, as well as generative voice, all of which are layered on top of Spotify’s existing investments in personalization and machine learning.

These new tools don’t necessarily have to be limited to a single feature, Spotify believes, which is why it’s now experimenting with other applications of the technology.

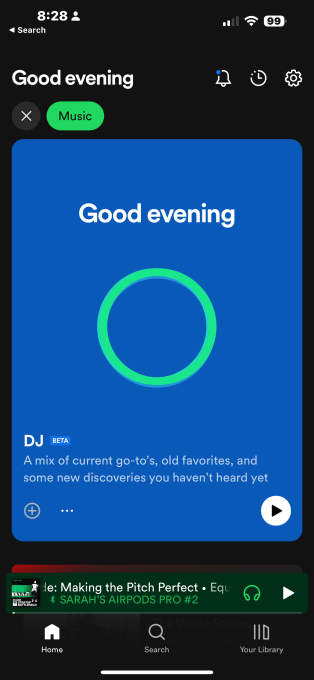

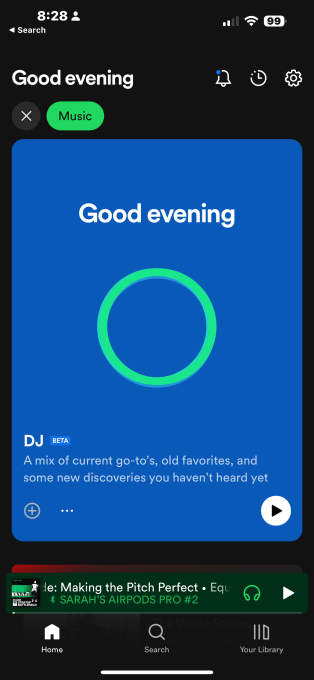

Though the highlight from Spotify’s Stream On event was the mobile app’s revamp, which now focuses on TikTok-like discovery feeds for music, podcasts, and audiobooks, the AI DJ is now a prominent part of the streaming service’s new experience. Introduced in late February to Spotify’s Premium subscribers in the U.S. and Canada, the DJ is designed to get to know users so well that it can play whatever they want to hear with the press of a button.

With the app’s revamp, the DJ will appear at the top of the screen under the Music sub-feed for subscribers, serving both as a lean-back way to stream favorite music and as a means to push free users to upgrade.

To create the commentary that accompanies the music the DJ streams, Spotify says it leveraged its own in-house music experts’ knowledge base and insights. Using OpenAI’s Generative AI technology, the DJ is then able to scale their commentary to the app’s end users. And unlike ChatGPT, which is trying to create answers by distilling information found on the wider web, Spotify’s more limited database of musical knowledge ensures the DJ’s commentary ends up being both relevant and accurate.

The actual music selections chosen by the DJ come from its existing understanding of a user’s tastes and interests, mirroring what would have before been programmed into personalized playlists, like Discover Weekly and others.

The AI DJ’s voice, meanwhile, was created using technology Spotify acquired from Sonatic last year and is based on that of Spotify’s Head of Cultural Partnerships Xavier “X” Jernigan, host of Spotify’s now-defunct morning show podcast, “The Get Up.” Surprisingly, the voice sounds incredibly realistic and not at all robotic. (During Spotify’s live event, Jernigan spoke alongside his AI double and the differences were difficult to spot. “I can listen to my voice all day,” he joked.)

“The reason it sounds this good — that is actually the aim of the Sonatic technology, the team which we acquired. It is about the emotion in the voice,” explains Spotify’s Head of Personalization, Ziad Sultan, in a conversation with TechCrunch after Stream On wrapped. “When you hear the AI DJ, you will hear where the pause is for breathing. You will hear the different intonations. You can hear excitement for certain types of genres,” he says.

A natural-sounding AI voice isn’t new, of course — Google wowed the world with its own human-sounding AI creation years ago. But its implementation within Duplex led to criticism, as the AI dialed businesses on behalf of the end user, initially without disclosing it wasn’t a real person. There should be no such similar concern with Spotify’s feature, given it’s even called an “AI DJ.”

To make Spotify’s AI voice sound natural, Jernigan went into the studio to produce high-quality voice recordings while working with experts in voice technology. There, he was instructed to read various lines using different emotions, which were then fed into the AI model. Spotify wouldn’t say how long this process takes, or detail the specifics, noting that the technology is evolving and referring to it as its “secret sauce.”

“From that high-quality input that has a lot of different permutations, [Jernigan] then doesn’t need to say anything anymore; now it is purely AI-generated,” says Sultan of the generated voice. Still, Jernigan will sometimes pop into Spotify’s writers’ room to offer feedback on how he’d read a line to ensure he has continuing input.

Image Credits: Spotify screenshot

But while the AI DJ is built using a combination of Sonatic and OpenAI technology, Spotify is also investing in in-house research to better understand the latest in AI and Large Language Models.

“We have a research team that works on the latest language models,” Sultan tells TechCrunch. In fact, Spotify has a few hundred people working on personalization and machine learning. In the case of the AI DJ, the team is using the OpenAI model, Sultan notes. “But, in general, we have a large research team that is understanding all the possibilities across Large Language Models, across generative voice, across personalization. This is fast-moving,” he says. “We want to be known for our AI expertise.”

Spotify may or may not use its own in-house AI tech to power future developments, however. It may decide it makes more sense to work with a partner, as it’s doing now with OpenAI. But it’s too soon to say.

“We are constantly publishing papers,” Sultan says. “We will be investing in the latest technologies — as you can imagine, in this industry, LLMs are such technology. So we will be developing the expertise.”

With this foundational technology, Spotify can push forward into other areas involving AI, LLMs, and generative AI tech. As to what those areas may be in terms of consumer products, the company won’t yet say. (We have heard, however, that a ChatGPT-like chatbot is among the options being experimented with. But nothing is settled in terms of a launch, as it’s one experiment among many others.)

“We haven’t announced the exact plans of when we might expand to new markets, new languages, etc. But it’s a technology that is a platform. We can do it and we hope to share more as it evolves,” Sultan says.

Early consumer feedback for AI is promising, Spotify says

The company hadn’t wanted to develop a full suite of AI products because it wasn’t sure what consumer reaction would be to the DJ. Would people want an AI DJ? Would they engage with the feature? None of that was clear. After all, Spotify’s voice assistant (“Hey Spotify”) had been wound down over lack of adoption.


But there were early signs that the DJ feature might do well. Spotify had tested the product internally among employees before launching, and the usage and re-engagement metrics had been “very, very good.”

The public adoption, so far, matches what Spotify saw internally, Sultan tells us. That means there’s potential to spin up future products using the same underlying foundations.

“People are spending hours per day with this product…it helps them with choices, with discovery, it narrates to them the next music they should listen to, and explains to them why… so the reaction — if you check various social media, you will see it’s very positive, it’s emotional,” Sultan says.

In addition, Spotify shared that, on the days users tuned in, they spent 25% of their listening time with the DJ, and more than half of first-time listeners returned to use the feature the very next day. These metrics are early, however, as the feature hasn’t yet fully rolled out in the U.S. and Canada. But they are promising, the company believes.

“I think it is one amazing step in building a relationship between really valuable products and users,” Sultan says. But he cautions that the challenge ahead will be to “find the right application and then to build it correctly.”

“In this case, we said this was an AI DJ for music. We created the writers’ room for it. We put it in the hands of users to do exactly the job it was meant to do. It’s working super well. But it is definitely fun to dream about what else we could do and how fast we could do it,” he adds.

Spotify’s new ‘DJ’ feature is the first step into the streamer’s AI-powered future by Sarah Perez originally published on TechCrunch
