TikTok is continuing its PR offensive to convince the world that it takes its content moderation responsibilities seriously, as the ByteDance-owned social video platform today published its latest Community Guidelines Enforcement Report.

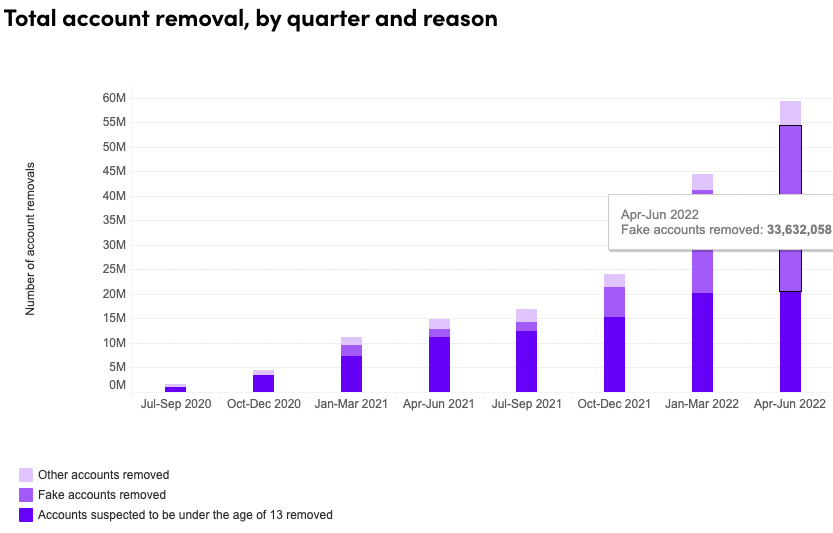

Covering the period from April 1 to June 30 this year, the report spans a wide range of self-reported data points around video and account takedowns, the most notable of which arguably relate to fake accounts. TikTok reports that it removed 33.6 million fake accounts during the quarter, a 61% increase on the 20.8 million accounts it removed in the previous quarter. Compared with the corresponding second quarter last year, TikTok’s fake account removals have grown by more than 2,000% over 12 months.
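For readers who want to check the arithmetic, the quarter-on-quarter figure can be reproduced with a short Python sketch. The function and variable names here are illustrative only and do not come from TikTok's report. The last value is an inference, not a reported number: growth of more than 2,000% to 33.6 million implies that removals in the year-ago quarter were below roughly 1.6 million.

```python
def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, expressed as a percentage."""
    return (new - old) / old * 100.0


# Fake account removals as reported by TikTok (previous quarter vs. Q2).
q1_fake_removed = 20.8e6
q2_fake_removed = 33.6e6

quarterly_change = percent_change(q1_fake_removed, q2_fake_removed)
print(f"Quarter-on-quarter change: {quarterly_change:.1f}%")

# Assumption-based inference: a rise of more than 2,000% means the Q2 figure
# is more than 21 times the year-ago figure, which caps the year-ago figure.
implied_baseline_ceiling = q2_fake_removed / (1 + 2000 / 100)
print(f"Implied year-ago ceiling: {implied_baseline_ceiling:,.0f} accounts")
```

The first result rounds to the 61% cited in the report; the ceiling is only a bound derived from the "more than 2,000%" claim.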

The definition of a fake account varies, but it generally refers to any account that purports to be someone or something that it’s not — this could mean a celebrity, political figure, brand, or some other scammer with nefarious intentions.

TikTok: Total account removal, by quarter and reason. Image Credits: TikTok

What’s perhaps most interesting here is that while fake account removals have apparently increased, the number of spam accounts blocked at the sign-up stage decreased dramatically, dropping from around 202 million during the first quarter to some 75 million. This is no coincidence, according to TikTok, which says that it has implemented measures to “hide enforcement actions from malicious actors,” essentially to prevent them from gaining insights into TikTok’s detection capabilities.

In short, it seems as though TikTok has allowed more spammy / fake accounts onto the platform, but ultimately removed more once they’re on.

Elsewhere in the report, TikTok said its proactive video removals (where it removes content before it’s reported) rose from 83.6% in Q1 to 89.1% in Q2, while videos removed in under 24 hours (from when a report is received) increased from 71.9% to 83.9%.

Legitimate

TikTok’s rise over the past few years has been fairly rapid, with the company reporting 1 billion active users last year, leading Google to invest in a rival service called YouTube Shorts. And just as the other tech heavyweights have been forced to become content moderators to prevent everything from political chicanery to vaccine misinformation, TikTok has had to fall in line too.

While TikTok has long tried to enhance its credentials by banning deepfake videos and removing misinformation, with the midterm elections coming up in the U.S., some politicians have voiced concerns about potential interference, either from China (where TikTok’s parent company hails from) or elsewhere. Indeed, TikTok recently launched an in-app midterms Elections Center, and shared further plans for fighting misinformation.

Elsewhere, TikTok is fighting battles on multiple fronts. News emerged from the U.K. this week that the company is facing a $29 million fine for “failing to protect children’s privacy,” with the Information Commissioner’s Office (ICO) provisionally finding that the company “may have” processed data of children under the age of 13 without parental consent. This followed a planned privacy policy switch in Europe, which TikTok eventually had to pause following regulatory scrutiny.

“TikTok says fake account removal increased 61% to 33.6M in Q2 2022” by Paul Sawers, originally published on TechCrunch
