Earlier this year, TikTok said it was developing a new system that would restrict certain types of mature content from being viewed by teen users. Today, the company is introducing the first version of this system, called “Content Levels,” due to launch in a matter of weeks. It’s also preparing the rollout of a new tool that will allow users to filter videos with certain words or hashtags from showing up in their feeds.

Together, the features are designed to give users more control over their TikTok experience while making the app safer, particularly for younger users. This is an area where TikTok today is facing increased scrutiny — not only from regulators and lawmakers who are looking to tighten their grip on social media platforms in general, but also from those seeking justice over social media’s harms.

For instance, a group of parents recently sued TikTok after their children died attempting dangerous challenges they allegedly saw on the app. Meanwhile, former content moderators sued the company, alleging it failed to support their mental health despite the harrowing nature of their job.

With the new tools, TikTok aims to put more moderation control into the hands of users and content creators.

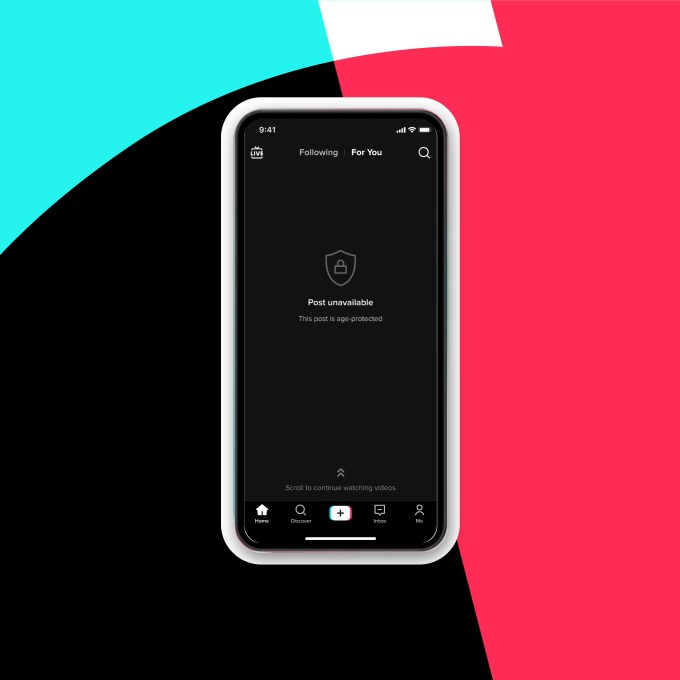

The forthcoming Content Levels system is meant to provide a means of classifying content on the app, similar to the age ratings used for movies, TV shows and video games.

Image Credits: TikTok Content Levels

Although adult content is banned, TikTok says some content on its app may contain “mature or complex themes that may reflect personal experiences or real-world events that are intended for older audiences.” Its Content Levels system will work to classify this content and assign a maturity score.

In the coming weeks, TikTok will introduce an early version of the Content Levels system designed to prevent content with overtly mature themes from reaching users ages 13 to 17. Videos with mature themes — like fictional scenes that could be too frightening or intense for younger users — will be assigned a maturity score to keep them from being seen by TikTok’s under-18 users. The system will be expanded over time to offer filtering options for the entire community, not just teens.

The maturity score will be assigned by Trust and Safety moderators to videos that are increasing in popularity or that have been reported on the app, we’re told.

Previously, TikTok said content creators may be asked to tag their content as well, but it has yet to go into detail on this aspect. A spokesperson said that’s a separate effort from what’s being announced today, however.

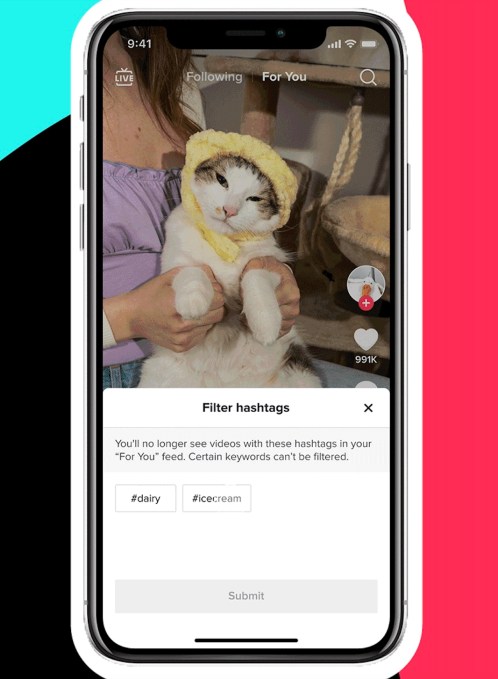

Image Credits: TikTok

In addition, TikTok will soon launch another tool for filtering content from your For You and Following feeds.

This feature will let users manually block videos with certain words or hashtags from their feeds. This doesn’t necessarily need to be used for filtering potentially problematic or triggering content — it could also be used to stop the algorithm from showing you topics you simply don’t care about or have gotten sick of seeing. TikTok suggests you could use it to block dairy or meat recipes if you were going vegan, for example, or to stop seeing DIY tutorials after you completed the referenced home project.

Image Credits: TikTok content filters

Related to these new features, the company said it’s expanding its existing test of a system that works to diversify recommendations in order to prevent users from being repeatedly exposed to potentially problematic content — like videos about extreme dieting or fitness, sadness or breakups.

This test launched last year in the U.S. following a 2021 congressional inquiry into how the algorithmic recommendation systems of social apps like TikTok could be promoting harmful eating disorder content to younger users.

TikTok admits the system still requires some work due to the nuances involved. For instance, it can be difficult to separate out content focused on recovering from eating disorders, which could have both sad and encouraging themes. The company says it’s currently training this system to support more languages for future expansion to new markets.

As described, this trio of tools could make for a healthier way to engage with the app — but in reality, automated systems like these tend to have failures.

So far, TikTok hasn’t been able to tamp down problematic content in a number of cases, whether it’s kids destroying public school bathrooms, shooting each other with pellet guns or jumping off milk crates, among other dangerous challenges and viral stunts. It has also at times allowed hateful content involving misogyny, white supremacy or transphobic statements to fall through the cracks, along with misinformation.

To what extent TikTok’s new tools actually make an impact on who sees what content remains to be seen.

“As we continue to build and improve these systems, we’re excited about the opportunity to contribute to long-running industry-wide challenges in terms of building for a variety of audiences and with recommender systems,” wrote TikTok Head of Trust and Safety Cormac Keenan in a blog post. “We also acknowledge that what we’re striving to achieve is complex and we may make some mistakes,” he added.
