The UK’s media watchdog, Ofcom, has published a debut report on its first year regulating a selection of video-sharing platforms (VSPs) — including TikTok, Snapchat, Twitch, Vimeo and OnlyFans — following the introduction of content-handling rules aimed at protecting minors and others from viewing harmful user-generated video content online.

As well as aiming to shrink the risk of minors being exposed to age-inappropriate content (an area the UK’s data protection watchdog also has under watch), the VSP regulation requires in-scope Internet platforms to take steps to protect all their users from content likely to incite violence or hatred against protected groups or which would be considered a criminal offence under laws related to terrorism, child sexual abuse material, racism and xenophobia.

It’s a taster of a broader (and more controversial) online content regulation that’s been years in the making — aka the Online Safety Bill — which remains in limbo after the new UK prime minister, and her freshly appointed minister heading up digital issues, paused the draft legislation last month saying they wanted to tweak the approach in response to freedom of expression concerns.

There are questions about whether the Online Safety Bill will/can survive the UK’s stormy domestic political situation. Which means the VSP regulation may end up sticking around longer (and doing more heavy lifting) than originally envisaged if, as now looks likely, it takes the government rather more time than expected to legislate for the wider online safety rules that ministers have been planning since 2018.

The draft Online Safety Bill, which sets up Ofcom as the UK’s chief internet content regulator, had already attracted plenty of controversy (and become cluttered with add-ons and amendments) before it got parked by new secretary of state for digital, Michelle Donelan, last month. (Just before new prime minister, Liz Truss, unleashed a flurry of radical libertarian economic policies that succeeded in spooking the financial markets and parking her fledgling authority, generating a fresh domestic political crisis.) So the bill’s fate, like the government’s, remains tbc.

In the meantime, Ofcom is getting on with regulating the subset of digital services it can. Under the VSP regulation it’s empowered to act against video-sharing platforms that fail to address online harms by putting in place appropriate governance systems and processes, including by issuing enforcement notifications that require a platform to take specified actions and/or by imposing a financial penalty (although its guidance says it will “usually attempt to work with providers informally first to try to resolve compliance concerns”). So some digital businesses are already undergoing regulatory oversight in the UK vis-a-vis their content moderation practices.

A regulatory gap remains, though — with the VSP regulation applying only to a subset of video-sharing platforms (NB: on-demand video platforms like streaming services fall under separate UK regulations).

Hence — presumably — social media sites like Instagram and Twitter not being on the list of VSPs which have notified Ofcom they fall within the regime’s jurisdiction, despite both allowing users to share their own videos (videos that may, in the case of Twitter, include adult content as its T&Cs permit such content) — while, in the other camp, the likes of TikTok, Snapchat and Twitch have notified themselves as subject to the rules. (We asked Ofcom about how/whether notification criteria would apply to non-notified platforms like Twitter but at the time of writing it had not responded to our questions.)

Bottom line: It is up to platforms to self-assess whether the VSP regulation applies to them. And as well as the better known platforms listed above, the full list of (currently) nineteen “notified video-sharing platforms” spans a grab-bag of companies and content themes, including a number of smaller, UK-based adult-themed content/creator sites; gaming streamer and extreme sports platforms; social shopping, networking and tourism apps; and a couple of ‘current affairs’ video sites, including conspiracy and hate-speech magnet, BitChute, and a smaller (UK-founded) site that also touts “censorship-free” news.

Ofcom’s notification criteria for the VSP regulation contain a number of provisions but emphasize that providers should “closely consider” whether their service has the “principal purpose of providing videos to the public”, either as a whole or in a “dissociable section”; and pay attention to whether video provision is an “essential functionality” of their service as a whole, meaning video contributes “significantly” to the commercial and functional value.

Given it’s early days for the regulation (which came into effect in November 2020, although Ofcom’s guidance and plan for overseeing the rules weren’t published until October 2021, which is why it’s only now reporting on its first year of oversight) and given platforms are left to self-assess whether they fall in scope, the list may not be entirely comprehensive as yet; and more existing services that don’t appear on the list could be added as the regime progresses.

Including by Ofcom itself — which can use its powers to request information and assess a service if it suspects it meets the statutory criteria but has not self-notified. It can also take enforcement action over a lack of notification — ordering a service to notify and financially sanctioning those that fail to do so — so video sharing services that try to evade the rules by pretending they don’t apply aren’t likely to go unnoticed for too long.

So what’s the regulator done so far in this first phase of oversight? Ofcom says it’s used its powers to gather information from notified platforms about what they are doing to protect users from harm online.

Its report offers a round-up of intel on how listed companies tackle content challenges — offering nuggets like TikTok relies “predominantly on proactive detection of harmful video content” (aka automation) rather than “reactive user reporting”, with the latter said to lead to just 4.6% of videos removed. And Twitch is unique among the regulated VSPs in enforcing on-platform sanctions (such as account bans) for “severe” off-platform conduct, such as terrorist activities or sexual exploitation of children.

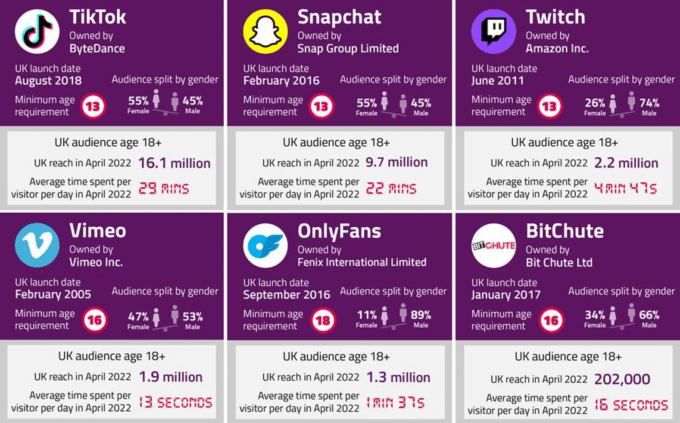

The six biggest notified UK-established video-sharing platforms (Image credit: Ofcom)

Snapchat is currently the only partially regulated video sharing service of those being overseen by Ofcom — since only two areas of the app are currently notified as in scope: namely Spotlight and Discover, two trending/topical content feeds that can contain user generated video.

Ofcom’s report also highlights that Snap uses “tools” (no further detail is offered) to estimate the age of users on its platform to try to identify underage users who misrepresented their age on sign-up (i.e. by entering a false birth date, as it does not require harder age verification).

“This is based on a number of factors and is used to stop users suspected to be under 18 from seeing content that is inappropriate for them, such as advertisements of regulated goods. However, Snap did not disclose whether this information is used to validate information about the age of its users or whether it would prompt further investigation of the user,” it goes on in further detail-light remarks — highlighting that its first year of information gathering on platforms’ processes still has plenty of blanks to fill in.

Nonetheless, the regulator is planting a flag with this first report — one that signifies it’s arrived at base camp and is bedding down and preparing to spend as long as it takes to conquer the content regulation mountain looming ahead.

“Ofcom is one of the first regulators in Europe to do this,” it emphasizes in a press release accompanying the report’s publication. “Today’s report is the first of its kind under these laws and reveals information previously unpublished by these companies.”

Commenting in a statement, Ofcom’s CEO, Melanie Dawes, added:

“Today’s report is a world first. We’ve used our powers to lift the lid on what UK video sites are doing to look after the people who use them. It shows that regulation can make a difference, as some companies have responded by introducing new safety measures, including age verification and parental controls.

“But we’ve also exposed the gaps across the industry, and we now know just how much they need to do. It’s deeply concerning to see yet more examples of platforms putting profits before child safety. We have put UK adult sites on notice to set out what they will do to prevent children accessing them.”

Age verification for adult sites

Top of Ofcom’s concerns after its first pass at gathering intel on how VSPs are approaching harmful content is a lack of robust age-verification measures on UK-based adult-themed content sites, measures which the regulator says it wants to see applied to prevent children accessing pornography.

“Ofcom is concerned that smaller UK-based adult sites do not have robust measures in place to prevent children accessing pornography. They all have age verification measures in place when users sign up to post content. However, users can generally access adult content just by self-declaring that they are over 18,” it warns, adding that it was told by one smaller adult platform it had considered implementing age verification but had decided not to as it would reduce its profitability (its report does not name the platform in question in that case).

The report credits the VSP regulation with compelling the (predominantly) adult content creator site, OnlyFans, to adopt age verification for all new UK subscribers, which it says the platform has done using third-party tools provided by (UK) digital identity startups Yoti and Ondato.

(Quick aside: That UK startup name dropping here does not look accidental as it’s where the government’s 2019 manifesto promise — to make the country the safest place in the world to go online — intersects with a (simultaneously claimed) digital growth agenda. The latter policy priority skips over the huge compliance bureaucracy that the online safety regime will land on homegrown tech businesses, making it more costly and risky for all sorts of services to operate (which ofc sounds bad for digital growth), in favor of spotlighting an expanding cottage industry of UK ‘safety’ startups and government-fostered innovators — whose tools may end up being very generally required if the online safety rulebook is to even vaguely deliver as intended — and so (ta-da!) there’s your digital growth!)

Clearly, a push to scale uptake of ‘Made in Britain SafetyTech’ dovetails neatly with Ofcom — in its role as long-anointed (but not yet fully appointed) online harms watchdog — cranking up the pressure on regulated platforms to do more.

Ofcom has, also today, published new research in which it says it found that a large majority of people (81%) do not mind proving their age online in general, and a slightly smaller large majority (78%) expect to have to do so for certain online activities, begging the question: which Internet users did it ask exactly; and how did it suggest they’d be asked to prove their age (i.e. constantly, via endless age verification pop-ups interrupting their online activity, or persistently, handing their Internet browsing history to a single digital ID company and praying its security is infallible, say)? (But I digress…)

For the record, Ofcom says this research it’s simultaneously publishing has been taken from a number of separate studies — including reports on the VSP Landscape, the VSP Tracker (March 2022), the VSP Parental Guidance research, and the Adults Attitudes to Age-Verification on adult sites research — so there are likely a variety of sentiments and contexts underlying the stats it’s chosen to highlight.

“A similar proportion (80%) feel internet users should be required to verify their age when accessing pornography online, especially on dedicated adult sites,” Ofcom’s PR goes on, warning that it will be stepping up action over the coming year to compel porn sites to adopt age verification tech.

“Over the next year, adult sites that we already regulate must have in place a clear roadmap to implementing robust age verification measures. If they don’t, they could face enforcement action,” it writes. “Under future Online Safety laws, Ofcom will have broader powers to ensure that many more services are protecting children from adult content.”

Earlier this year, the government signalled that mandatory age verification for adult sites would be baked into the Online Safety Bill by bringing porn sites in scope of the legislation to make it harder for children to access or stumble across such content — so Ofcom’s move prefigures that anticipated future law.

Early concerns and changes

The 114-page “first-year” report of Ofcom’s VSP rules oversight goes on to flag a concern about what it describes as the “limited” evidence platforms provided it with (so far) on how well their safety measures are operating. “This creates difficulty in determining with any certainty whether VSPs’ safety measures are working consistently and effectively,” it notes in the executive summary.

It also highlights a lack of adequate preparation for the regulation — saying some platforms are “not sufficiently prepared” or resourced to meet the requirements — and stipulates that it wants to see platforms providing more comprehensive responses to its information requests in the future.

Ofcom also warns over platforms not prioritising risk assessment processes — which its report describes as “fundamental” to proactively identifying and mitigating risks to user safety — further underscoring this will be a key area of focus, as it says: “Risk assessments will be a requirement on all regulated services under the Online Safety regime.”

The regulator says the coming year of its oversight of the VSP regime will concentrate on how platforms “set, enforce, and test their approach to user safety” — including by ensuring they have sufficient systems and processes in place to set out and uphold community guidelines covering all relevant harms; monitoring whether they “consistently and effectively” apply and enforce their community T&Cs; reviewing tools they provide to users to let them control their experience on the platform and also encouraging greater engagement with them; and also driving forward “the implementation of robust age assurance to protect children from the most harmful online content (including pornography)”.

So homegrown safety tech, digital ID and age assurance startups will surely be preparing for a growth year — even if fuller online safety legislation remains frozen in its tracks owing to ongoing UK political instability.

“Our priorities for Year 2 will support more detailed scrutiny of platforms’ systems and processes,” Ofcom adds, before reiterating its expectation of a future “much broader” workload — i.e. if/when the Online Safety Bill returns to the Commons.

As well as OnlyFans adopting age verification for all new UK subscribers, Ofcom’s report flags other positive changes it says have been made by some of the other larger platforms in response to being regulated — noting, for example, that TikTok now categorizes content that may be unsuitable for younger users to prevent them from viewing it; and also pointing to the video-sharing platform establishing an Online Safety Oversight Committee focused on oversight of content and safety compliance within the UK and EU as another creditable step.

It also welcomes a recently launched parental control feature by Snapchat, called Family Center, which lets parents and guardians view a list of their child’s conversations without seeing the content of the messages.

While Vimeo gets a thumbs up for now only allowing material rated ‘all audiences’ to be visible to users without an account — with content that’s rated ‘mature’ or ‘unrated’ now automatically put behind a login screen. And Ofcom’s report also notes that the service carried out a risk assessment in response to the VSP regime.

Ofcom further flags changes at BitChute — which it says updated its T&Cs (including adding ‘Incitement to Hatred’ to its prohibited content terms last year), as well as increasing the number of people it has working on content moderation — and the report describes the platform as having engaged “constructively” with Ofcom since the start of the regulation.

Nonetheless, the report acknowledges that plenty more change will be needed for the regime to have the desired impact — and ensure VSPs are taking “effective action to address harmful content” — given how many are “not sufficiently equipped, prepared and resourced for regulation”.

Nor are VSPs prioritising risk assessing their platforms — a measure Ofcom’s report makes clear will be a cornerstone of the UK’s online safety regime.

So, tl;dr, the regulation process is just getting going.

“Over the next twelve months, we expect companies to set and enforce effective terms and conditions for their users, and quickly remove or restrict harmful content when they become aware of it. We will review the tools provided by platforms to their users for controlling their experience, and expect them to set out clear plans for protecting children from the most harmful online content, including pornography,” Ofcom adds.

On the enforcement front, it notes that it has recently (last month) opened a formal investigation into one firm (Tapnet), which operates adult site RevealMe, after it initially failed to comply with an information request. “While Tapnet Ltd provided its response after the investigation was opened, this has impacted on our ability to comment on RevealMe’s protection measures in this report,” it adds.

UK watchdog gives first report into how video sharing sites are tackling online harms by Natasha Lomas originally published on TechCrunch
